diff --git a/.gitignore b/.gitignore

index c18dd8d..252b02d 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1 +1,2 @@

__pycache__/

+suno_bark.egg-info/

diff --git a/LICENSE b/LICENSE

index d1bbe80..11aac3a 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,400 +1,21 @@

-

-Attribution-NonCommercial 4.0 International

-

-=======================================================================

-

-Creative Commons Corporation ("Creative Commons") is not a law firm and

-does not provide legal services or legal advice. Distribution of

-Creative Commons public licenses does not create a lawyer-client or

-other relationship. Creative Commons makes its licenses and related

-information available on an "as-is" basis. Creative Commons gives no

-warranties regarding its licenses, any material licensed under their

-terms and conditions, or any related information. Creative Commons

-disclaims all liability for damages resulting from their use to the

-fullest extent possible.

-

-Using Creative Commons Public Licenses

-

-Creative Commons public licenses provide a standard set of terms and

-conditions that creators and other rights holders may use to share

-original works of authorship and other material subject to copyright

-and certain other rights specified in the public license below. The

-following considerations are for informational purposes only, are not

-exhaustive, and do not form part of our licenses.

-

- Considerations for licensors: Our public licenses are

- intended for use by those authorized to give the public

- permission to use material in ways otherwise restricted by

- copyright and certain other rights. Our licenses are

- irrevocable. Licensors should read and understand the terms

- and conditions of the license they choose before applying it.

- Licensors should also secure all rights necessary before

- applying our licenses so that the public can reuse the

- material as expected. Licensors should clearly mark any

- material not subject to the license. This includes other CC-

- licensed material, or material used under an exception or

- limitation to copyright. More considerations for licensors:

- wiki.creativecommons.org/Considerations_for_licensors

-

- Considerations for the public: By using one of our public

- licenses, a licensor grants the public permission to use the

- licensed material under specified terms and conditions. If

- the licensor's permission is not necessary for any reason--for

- example, because of any applicable exception or limitation to

- copyright--then that use is not regulated by the license. Our

- licenses grant only permissions under copyright and certain

- other rights that a licensor has authority to grant. Use of

- the licensed material may still be restricted for other

- reasons, including because others have copyright or other

- rights in the material. A licensor may make special requests,

- such as asking that all changes be marked or described.

- Although not required by our licenses, you are encouraged to

- respect those requests where reasonable. More_considerations

- for the public:

- wiki.creativecommons.org/Considerations_for_licensees

-

-=======================================================================

-

-Creative Commons Attribution-NonCommercial 4.0 International Public

-License

-

-By exercising the Licensed Rights (defined below), You accept and agree

-to be bound by the terms and conditions of this Creative Commons

-Attribution-NonCommercial 4.0 International Public License ("Public

-License"). To the extent this Public License may be interpreted as a

-contract, You are granted the Licensed Rights in consideration of Your

-acceptance of these terms and conditions, and the Licensor grants You

-such rights in consideration of benefits the Licensor receives from

-making the Licensed Material available under these terms and

-conditions.

-

-Section 1 -- Definitions.

-

- a. Adapted Material means material subject to Copyright and Similar

- Rights that is derived from or based upon the Licensed Material

- and in which the Licensed Material is translated, altered,

- arranged, transformed, or otherwise modified in a manner requiring

- permission under the Copyright and Similar Rights held by the

- Licensor. For purposes of this Public License, where the Licensed

- Material is a musical work, performance, or sound recording,

- Adapted Material is always produced where the Licensed Material is

- synched in timed relation with a moving image.

-

- b. Adapter's License means the license You apply to Your Copyright

- and Similar Rights in Your contributions to Adapted Material in

- accordance with the terms and conditions of this Public License.

-

- c. Copyright and Similar Rights means copyright and/or similar rights

- closely related to copyright including, without limitation,

- performance, broadcast, sound recording, and Sui Generis Database

- Rights, without regard to how the rights are labeled or

- categorized. For purposes of this Public License, the rights

- specified in Section 2(b)(1)-(2) are not Copyright and Similar

- Rights.

- d. Effective Technological Measures means those measures that, in the

- absence of proper authority, may not be circumvented under laws

- fulfilling obligations under Article 11 of the WIPO Copyright

- Treaty adopted on December 20, 1996, and/or similar international

- agreements.

-

- e. Exceptions and Limitations means fair use, fair dealing, and/or

- any other exception or limitation to Copyright and Similar Rights

- that applies to Your use of the Licensed Material.

-

- f. Licensed Material means the artistic or literary work, database,

- or other material to which the Licensor applied this Public

- License.

-

- g. Licensed Rights means the rights granted to You subject to the

- terms and conditions of this Public License, which are limited to

- all Copyright and Similar Rights that apply to Your use of the

- Licensed Material and that the Licensor has authority to license.

-

- h. Licensor means the individual(s) or entity(ies) granting rights

- under this Public License.

-

- i. NonCommercial means not primarily intended for or directed towards

- commercial advantage or monetary compensation. For purposes of

- this Public License, the exchange of the Licensed Material for

- other material subject to Copyright and Similar Rights by digital

- file-sharing or similar means is NonCommercial provided there is

- no payment of monetary compensation in connection with the

- exchange.

-

- j. Share means to provide material to the public by any means or

- process that requires permission under the Licensed Rights, such

- as reproduction, public display, public performance, distribution,

- dissemination, communication, or importation, and to make material

- available to the public including in ways that members of the

- public may access the material from a place and at a time

- individually chosen by them.

-

- k. Sui Generis Database Rights means rights other than copyright

- resulting from Directive 96/9/EC of the European Parliament and of

- the Council of 11 March 1996 on the legal protection of databases,

- as amended and/or succeeded, as well as other essentially

- equivalent rights anywhere in the world.

-

- l. You means the individual or entity exercising the Licensed Rights

- under this Public License. Your has a corresponding meaning.

-

-Section 2 -- Scope.

-

- a. License grant.

-

- 1. Subject to the terms and conditions of this Public License,

- the Licensor hereby grants You a worldwide, royalty-free,

- non-sublicensable, non-exclusive, irrevocable license to

- exercise the Licensed Rights in the Licensed Material to:

-

- a. reproduce and Share the Licensed Material, in whole or

- in part, for NonCommercial purposes only; and

-

- b. produce, reproduce, and Share Adapted Material for

- NonCommercial purposes only.

-

- 2. Exceptions and Limitations. For the avoidance of doubt, where

- Exceptions and Limitations apply to Your use, this Public

- License does not apply, and You do not need to comply with

- its terms and conditions.

-

- 3. Term. The term of this Public License is specified in Section

- 6(a).

-

- 4. Media and formats; technical modifications allowed. The

- Licensor authorizes You to exercise the Licensed Rights in

- all media and formats whether now known or hereafter created,

- and to make technical modifications necessary to do so. The

- Licensor waives and/or agrees not to assert any right or

- authority to forbid You from making technical modifications

- necessary to exercise the Licensed Rights, including

- technical modifications necessary to circumvent Effective

- Technological Measures. For purposes of this Public License,

- simply making modifications authorized by this Section 2(a)

- (4) never produces Adapted Material.

-

- 5. Downstream recipients.

-

- a. Offer from the Licensor -- Licensed Material. Every

- recipient of the Licensed Material automatically

- receives an offer from the Licensor to exercise the

- Licensed Rights under the terms and conditions of this

- Public License.

-

- b. No downstream restrictions. You may not offer or impose

- any additional or different terms or conditions on, or

- apply any Effective Technological Measures to, the

- Licensed Material if doing so restricts exercise of the

- Licensed Rights by any recipient of the Licensed

- Material.

-

- 6. No endorsement. Nothing in this Public License constitutes or

- may be construed as permission to assert or imply that You

- are, or that Your use of the Licensed Material is, connected

- with, or sponsored, endorsed, or granted official status by,

- the Licensor or others designated to receive attribution as

- provided in Section 3(a)(1)(A)(i).

-

- b. Other rights.

-

- 1. Moral rights, such as the right of integrity, are not

- licensed under this Public License, nor are publicity,

- privacy, and/or other similar personality rights; however, to

- the extent possible, the Licensor waives and/or agrees not to

- assert any such rights held by the Licensor to the limited

- extent necessary to allow You to exercise the Licensed

- Rights, but not otherwise.

-

- 2. Patent and trademark rights are not licensed under this

- Public License.

-

- 3. To the extent possible, the Licensor waives any right to

- collect royalties from You for the exercise of the Licensed

- Rights, whether directly or through a collecting society

- under any voluntary or waivable statutory or compulsory

- licensing scheme. In all other cases the Licensor expressly

- reserves any right to collect such royalties, including when

- the Licensed Material is used other than for NonCommercial

- purposes.

-

-Section 3 -- License Conditions.

-

-Your exercise of the Licensed Rights is expressly made subject to the

-following conditions.

-

- a. Attribution.

-

- 1. If You Share the Licensed Material (including in modified

- form), You must:

-

- a. retain the following if it is supplied by the Licensor

- with the Licensed Material:

-

- i. identification of the creator(s) of the Licensed

- Material and any others designated to receive

- attribution, in any reasonable manner requested by

- the Licensor (including by pseudonym if

- designated);

-

- ii. a copyright notice;

-

- iii. a notice that refers to this Public License;

-

- iv. a notice that refers to the disclaimer of

- warranties;

-

- v. a URI or hyperlink to the Licensed Material to the

- extent reasonably practicable;

-

- b. indicate if You modified the Licensed Material and

- retain an indication of any previous modifications; and

-

- c. indicate the Licensed Material is licensed under this

- Public License, and include the text of, or the URI or

- hyperlink to, this Public License.

-

- 2. You may satisfy the conditions in Section 3(a)(1) in any

- reasonable manner based on the medium, means, and context in

- which You Share the Licensed Material. For example, it may be

- reasonable to satisfy the conditions by providing a URI or

- hyperlink to a resource that includes the required

- information.

-

- 3. If requested by the Licensor, You must remove any of the

- information required by Section 3(a)(1)(A) to the extent

- reasonably practicable.

-

- 4. If You Share Adapted Material You produce, the Adapter's

- License You apply must not prevent recipients of the Adapted

- Material from complying with this Public License.

-

-Section 4 -- Sui Generis Database Rights.

-

-Where the Licensed Rights include Sui Generis Database Rights that

-apply to Your use of the Licensed Material:

-

- a. for the avoidance of doubt, Section 2(a)(1) grants You the right

- to extract, reuse, reproduce, and Share all or a substantial

- portion of the contents of the database for NonCommercial purposes

- only;

-

- b. if You include all or a substantial portion of the database

- contents in a database in which You have Sui Generis Database

- Rights, then the database in which You have Sui Generis Database

- Rights (but not its individual contents) is Adapted Material; and

-

- c. You must comply with the conditions in Section 3(a) if You Share

- all or a substantial portion of the contents of the database.

-

-For the avoidance of doubt, this Section 4 supplements and does not

-replace Your obligations under this Public License where the Licensed

-Rights include other Copyright and Similar Rights.

-

-Section 5 -- Disclaimer of Warranties and Limitation of Liability.

-

- a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE

- EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS

- AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF

- ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,

- IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,

- WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR

- PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,

- ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT

- KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT

- ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.

-

- b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE

- TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,

- NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,

- INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,

- COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR

- USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN

- ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR

- DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR

- IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.

-

- c. The disclaimer of warranties and limitation of liability provided

- above shall be interpreted in a manner that, to the extent

- possible, most closely approximates an absolute disclaimer and

- waiver of all liability.

-

-Section 6 -- Term and Termination.

-

- a. This Public License applies for the term of the Copyright and

- Similar Rights licensed here. However, if You fail to comply with

- this Public License, then Your rights under this Public License

- terminate automatically.

-

- b. Where Your right to use the Licensed Material has terminated under

- Section 6(a), it reinstates:

-

- 1. automatically as of the date the violation is cured, provided

- it is cured within 30 days of Your discovery of the

- violation; or

-

- 2. upon express reinstatement by the Licensor.

-

- For the avoidance of doubt, this Section 6(b) does not affect any

- right the Licensor may have to seek remedies for Your violations

- of this Public License.

-

- c. For the avoidance of doubt, the Licensor may also offer the

- Licensed Material under separate terms or conditions or stop

- distributing the Licensed Material at any time; however, doing so

- will not terminate this Public License.

-

- d. Sections 1, 5, 6, 7, and 8 survive termination of this Public

- License.

-

-Section 7 -- Other Terms and Conditions.

-

- a. The Licensor shall not be bound by any additional or different

- terms or conditions communicated by You unless expressly agreed.

-

- b. Any arrangements, understandings, or agreements regarding the

- Licensed Material not stated herein are separate from and

- independent of the terms and conditions of this Public License.

-

-Section 8 -- Interpretation.

-

- a. For the avoidance of doubt, this Public License does not, and

- shall not be interpreted to, reduce, limit, restrict, or impose

- conditions on any use of the Licensed Material that could lawfully

- be made without permission under this Public License.

-

- b. To the extent possible, if any provision of this Public License is

- deemed unenforceable, it shall be automatically reformed to the

- minimum extent necessary to make it enforceable. If the provision

- cannot be reformed, it shall be severed from this Public License

- without affecting the enforceability of the remaining terms and

- conditions.

-

- c. No term or condition of this Public License will be waived and no

- failure to comply consented to unless expressly agreed to by the

- Licensor.

-

- d. Nothing in this Public License constitutes or may be interpreted

- as a limitation upon, or waiver of, any privileges and immunities

- that apply to the Licensor or You, including from the legal

- processes of any jurisdiction or authority.

-

-=======================================================================

-

-Creative Commons is not a party to its public

-licenses. Notwithstanding, Creative Commons may elect to apply one of

-its public licenses to material it publishes and in those instances

-will be considered the “Licensor.” The text of the Creative Commons

-public licenses is dedicated to the public domain under the CC0 Public

-Domain Dedication. Except for the limited purpose of indicating that

-material is shared under a Creative Commons public license or as

-otherwise permitted by the Creative Commons policies published at

-creativecommons.org/policies, Creative Commons does not authorize the

-use of the trademark "Creative Commons" or any other trademark or logo

-of Creative Commons without its prior written consent including,

-without limitation, in connection with any unauthorized modifications

-to any of its public licenses or any other arrangements,

-understandings, or agreements concerning use of licensed material. For

-the avoidance of doubt, this paragraph does not form part of the

-public licenses.

-

-Creative Commons may be contacted at creativecommons.org.

+MIT License

+

+Copyright (c) Suno, Inc

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/README.md b/README.md

index a44887b..4b35eaf 100644

--- a/README.md

+++ b/README.md

@@ -1,27 +1,53 @@

# 🐶 Bark

- +[](https://discord.gg/J2B2vsjKuE)

[](https://twitter.com/OnusFM)

-[](https://discord.gg/J2B2vsjKuE)

+

+[](https://discord.gg/J2B2vsjKuE)

[](https://twitter.com/OnusFM)

-[](https://discord.gg/J2B2vsjKuE)

+ +[Examples](https://suno-ai.notion.site/Bark-Examples-5edae8b02a604b54a42244ba45ebc2e2) • [Suno Studio Waitlist](https://3os84zs17th.typeform.com/suno-studio) • [Updates](#-updates) • [How to Use](#-usage-in-python) • [Installation](#-installation) • [FAQ](#-faq)

-[Examples](https://suno-ai.notion.site/Bark-Examples-5edae8b02a604b54a42244ba45ebc2e2) | [Model Card](./model-card.md) | [Playground Waitlist](https://3os84zs17th.typeform.com/suno-studio)

-

-Bark is a transformer-based text-to-audio model created by [Suno](https://suno.ai). Bark can generate highly realistic, multilingual speech as well as other audio - including music, background noise and simple sound effects. The model can also produce nonverbal communications like laughing, sighing and crying. To support the research community, we are providing access to pretrained model checkpoints ready for inference.

-

+[//]:


(vertical spaces around image)

+

- +

+

+

-## 🔊 Demos

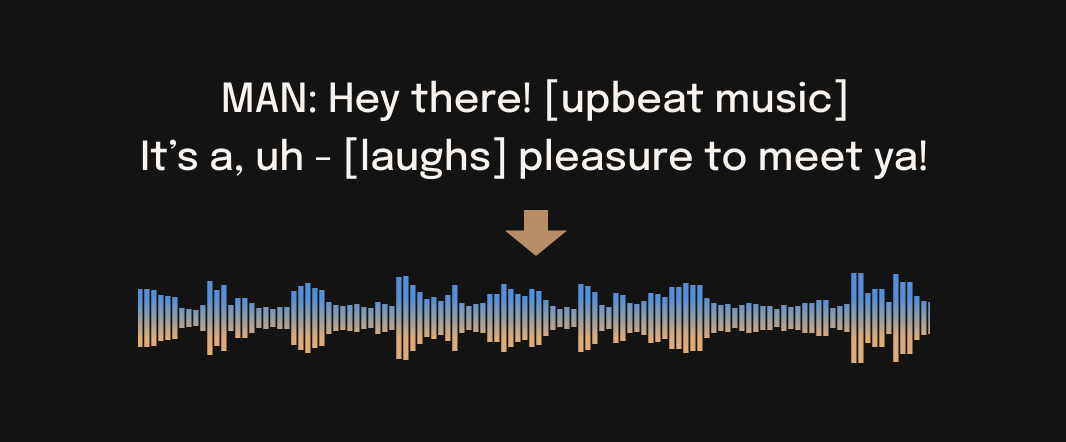

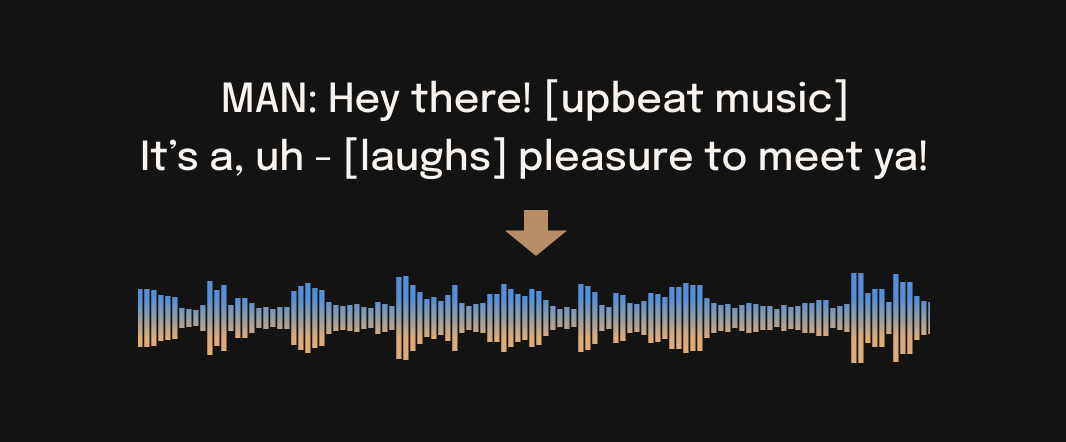

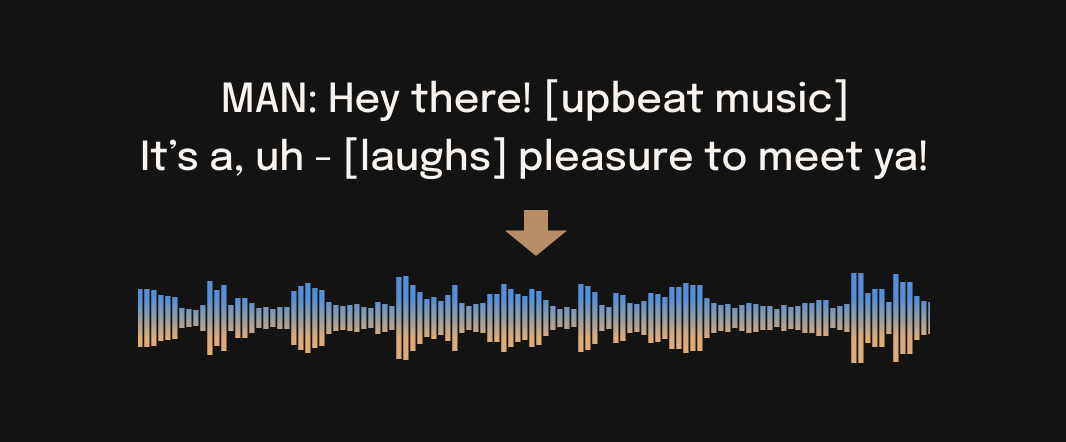

+Bark is a transformer-based text-to-audio model created by [Suno](https://suno.ai). Bark can generate highly realistic, multilingual speech as well as other audio - including music, background noise and simple sound effects. The model can also produce nonverbal communications like laughing, sighing and crying. To support the research community, we are providing access to pretrained model checkpoints, which are ready for inference and available for commercial use.

-[](https://huggingface.co/spaces/suno/bark)

+## ⚠ Disclaimer

+Bark was developed for research purposes. It is not a conventional text-to-speech model but instead a fully generative text-to-audio model, which can deviate in unexpected ways from provided prompts. Suno does not take responsibility for any output generated. Use at your own risk, and please act responsibly.

+

+## 🎧 Demos

+

+[](https://huggingface.co/spaces/suno/bark)

+[](https://replicate.com/suno-ai/bark)

[](https://colab.research.google.com/drive/1eJfA2XUa-mXwdMy7DoYKVYHI1iTd9Vkt?usp=sharing)

-## 🤖 Usage

+## 🚀 Updates

+

+**2023.05.01**

+- ©️ Bark is now licensed under the MIT License, which means it can be used commercially!

+- ⚡ 2x speed-up on GPU. 10x speed-up on CPU. We also added an option for a smaller version of Bark, which offers additional speed-up with the trade-off of slightly lower quality.

+- 📕 [Long-form generation](notebooks/long_form_generation.ipynb), voice consistency enhancements and other examples are now documented in a new [notebooks](./notebooks) section.

+- 👥 We created a [voice prompt library](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c). We hope this resource helps you find useful prompts for your use cases! You can also join us on [Discord](https://discord.gg/J2B2vsjKuE), where the community actively shares useful prompts in the **#audio-prompts** channel.

+- 💬 Growing community support and access to new features here:

+

+ [](https://discord.gg/J2B2vsjKuE)

+

+- 💾 You can now use Bark with GPUs that have low VRAM (<4GB).

+

+**2023.04.20**

+- 🐶 Bark release!

+

+## 🐍 Usage in Python

+

+

+ 🪑 Basics

```python

from bark import SAMPLE_RATE, generate_audio, preload_models

+from scipy.io.wavfile import write as write_wav

from IPython.display import Audio

# download and load all models

@@ -34,38 +60,42 @@ text_prompt = """

"""

audio_array = generate_audio(text_prompt)

+# save audio to disk

+write_wav("bark_generation.wav", SAMPLE_RATE, audio_array)

+

# play text in notebook

Audio(audio_array, rate=SAMPLE_RATE)

```

[pizza.webm](https://user-images.githubusercontent.com/5068315/230490503-417e688d-5115-4eee-9550-b46a2b465ee3.webm)

+

-To save `audio_array` as a WAV file:

-

-```python

-from scipy.io.wavfile import write as write_wav

-

-write_wav("/path/to/audio.wav", SAMPLE_RATE, audio_array)

-```

-

-### 🌎 Foreign Language

-

+

+ 🌎 Foreign Language

+

Bark supports various languages out-of-the-box and automatically determines language from input text. When prompted with code-switched text, Bark will attempt to employ the native accent for the respective languages. English quality is best for the time being, and we expect other languages to further improve with scaling.

+

+

```python

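+# Korean: "Chuseok is my favorite holiday. I can rest for a few days and spend time with friends and family."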

text_prompt = """

- Buenos días Miguel. Tu colega piensa que tu alemán es extremadamente malo.

- But I suppose your english isn't terrible.

+ 추석은 내가 가장 좋아하는 명절이다. 나는 며칠 동안 휴식을 취하고 친구 및 가족과 시간을 보낼 수 있습니다.

"""

audio_array = generate_audio(text_prompt)

```

+[suno_korean.webm](https://user-images.githubusercontent.com/32879321/235313033-dc4477b9-2da0-4b94-9c8b-a8c2d8f5bb5e.webm)

+

+*Note: since Bark recognizes languages automatically from input text, it is possible to use, for example, a German history prompt with English text. This usually leads to English audio with a German accent.*

-[miguel.webm](https://user-images.githubusercontent.com/5068315/230684752-10baadfe-1e7c-46a2-8323-43282aef2c8c.webm)

-

-### 🎶 Music

+

+

+ 🎶 Music

Bark can generate all types of audio, and, in principle, doesn't see a difference between speech and music. Sometimes Bark chooses to generate text as music, but you can help it out by adding music notes around your lyrics.

+

+

```python

text_prompt = """

@@ -73,39 +103,45 @@ text_prompt = """

"""

audio_array = generate_audio(text_prompt)

```

-

[lion.webm](https://user-images.githubusercontent.com/5068315/230684766-97f5ea23-ad99-473c-924b-66b6fab24289.webm)

+

-### 🎤 Voice Presets and Voice/Audio Cloning

+

+🎤 Voice Presets

+

+Bark supports 100+ speaker presets across [supported languages](#supported-languages). You can browse the library of speaker presets [here](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c), or in the [code](bark/assets/prompts). The community also often shares presets in [Discord](https://discord.gg/J2B2vsjKuE).

-Bark has the capability to fully clone voices - including tone, pitch, emotion and prosody. The model also attempts to preserve music, ambient noise, etc. from input audio. However, to mitigate misuse of this technology, we limit the audio history prompts to a limited set of Suno-provided, fully synthetic options to choose from for each language. Specify following the pattern: `{lang_code}_speaker_{0-9}`.

+Bark tries to match the tone, pitch, emotion and prosody of a given preset, but does not currently support custom voice cloning. The model also attempts to preserve music, ambient noise, etc.

+

+

```python

text_prompt = """

I have a silky smooth voice, and today I will tell you about

the exercise regimen of the common sloth.

"""

-audio_array = generate_audio(text_prompt, history_prompt="en_speaker_1")

+audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")

```

-

[sloth.webm](https://user-images.githubusercontent.com/5068315/230684883-a344c619-a560-4ff5-8b99-b4463a34487b.webm)
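Preset names in the example above follow the pattern `v2/{lang_code}_speaker_{index}`. The sketch below builds and parses such names so they can be chosen programmatically. It assumes, based only on the `v2/en_speaker_1` example and the older `{lang_code}_speaker_{0-9}` pattern, that each language offers speakers 0 through 9. `preset_name` and `parse_preset` are hypothetical helpers, not part of Bark, and the preset library may contain names that do not fit this pattern.

```python
import re
from typing import Tuple

# Assumed naming scheme (inferred from "v2/en_speaker_1"): a "v2/" prefix,
# a two-letter language code, and a single-digit speaker index.
PRESET_PATTERN = re.compile(r"v2/([a-z]{2})_speaker_([0-9])")


def preset_name(lang_code: str, speaker: int) -> str:
    """Build a preset string such as "v2/en_speaker_1" (hypothetical helper)."""
    if not re.fullmatch(r"[a-z]{2}", lang_code):
        raise ValueError(f"expected a two-letter language code, got {lang_code!r}")
    if not 0 <= speaker <= 9:
        raise ValueError(f"speaker index must be 0-9, got {speaker}")
    return f"v2/{lang_code}_speaker_{speaker}"


def parse_preset(name: str) -> Tuple[str, int]:
    """Split a preset string back into (lang_code, speaker)."""
    match = PRESET_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"not a recognized preset name: {name!r}")
    return match.group(1), int(match.group(2))
```

With Bark installed, the result can be passed straight to the `history_prompt` argument shown above, e.g. `generate_audio(text_prompt, history_prompt=preset_name("en", 1))`.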

+

-*Note: since Bark recognizes languages automatically from input text, it is possible to use for example a german history prompt with english text. This usually leads to english audio with a german accent.*

+### Generating Longer Audio

+

+By default, `generate_audio` works well with around 13 seconds of spoken text. For an example of how to do long-form generation, see this [example notebook](notebooks/long_form_generation.ipynb).

-### 👥 Speaker Prompts

+

+Click to toggle example long-form generations (from the example notebook)

-You can provide certain speaker prompts such as NARRATOR, MAN, WOMAN, etc. Please note that these are not always respected, especially if a conflicting audio history prompt is given.

+[dialog.webm](https://user-images.githubusercontent.com/2565833/235463539-f57608da-e4cb-4062-8771-148e29512b01.webm)

+

+[longform_advanced.webm](https://user-images.githubusercontent.com/2565833/235463547-1c0d8744-269b-43fe-9630-897ea5731652.webm)

+

+[longform_basic.webm](https://user-images.githubusercontent.com/2565833/235463559-87efe9f8-a2db-4d59-b764-57db83f95270.webm)

+

+
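The linked notebook is the reference for long-form generation. As a simplified, independent sketch of the general idea (split the text into short pieces, generate each one, and join the results), the code below assumes a naive regex sentence splitter and a 0.25 s pause between sentences; both are our choices, not necessarily what the notebook does. The `generate` callable stands in for Bark so the logic can be exercised without the model; `split_sentences` and `generate_long` are hypothetical helpers.

```python
import re
from typing import Callable, List

import numpy as np


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def generate_long(
    text: str,
    generate: Callable[[str], np.ndarray],
    sample_rate: int,
    pause_seconds: float = 0.25,
) -> np.ndarray:
    """Generate each sentence separately and join them with short silences."""
    silence = np.zeros(int(pause_seconds * sample_rate), dtype=np.float32)
    pieces = []
    for sentence in split_sentences(text):
        pieces.append(generate(sentence).astype(np.float32))
        pieces.append(silence)
    if not pieces:
        return np.zeros(0, dtype=np.float32)
    # Drop the trailing silence after the last sentence.
    return np.concatenate(pieces[:-1])
```

With Bark installed, one possible call is `generate_long(long_text, lambda s: generate_audio(s, history_prompt="v2/en_speaker_1"), SAMPLE_RATE)`. Reusing the same `history_prompt` for every sentence is a simple way to reduce voice drift between chunks, though it may not be enough on its own.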

-```python

-text_prompt = """

- WOMAN: I would like an oatmilk latte please.

- MAN: Wow, that's expensive!

-"""

-audio_array = generate_audio(text_prompt)

-```

-[latte.webm](https://user-images.githubusercontent.com/5068315/230684864-12d101a1-a726-471d-9d56-d18b108efcb8.webm)

## 💻 Installation

@@ -120,20 +156,23 @@ or

git clone https://github.com/suno-ai/bark

cd bark && pip install .

```

+*Note: Do NOT use `pip install bark`. It installs a different package, which is not managed by Suno.*

+

## 🛠️ Hardware and Inference Speed

Bark has been tested and works on both CPU and GPU (`pytorch 2.0+`, CUDA 11.7 and CUDA 12.0).

-Running Bark requires running >100M parameter transformer models.

-On modern GPUs and PyTorch nightly, Bark can generate audio in roughly realtime. On older GPUs, default colab, or CPU, inference time might be 10-100x slower.

-If you don't have new hardware available or if you want to play with bigger versions of our models, you can also sign up for early access to our model playground [here](https://3os84zs17th.typeform.com/suno-studio).

+On enterprise GPUs and PyTorch nightly, Bark can generate audio in roughly real-time. On older GPUs, default Colab, or CPU, inference time might be significantly slower. For older GPUs or CPU, you might want to consider using smaller models. Details can be found in our tutorial sections.

+

+The full version of Bark requires around 12GB of VRAM to hold everything on GPU at the same time.

+To use a smaller version of the models, which should fit into 8GB VRAM, set the environment flag `SUNO_USE_SMALL_MODELS=True`.
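A minimal sketch of setting the `SUNO_USE_SMALL_MODELS` flag from inside a Python script rather than the shell. It assumes the flag is read from the environment when Bark is first imported, so the sketch sets it before any `bark` import; exporting the variable in the shell before launching Python should have the same effect.

```python
import os

# Set the flag before bark is imported, on the assumption that it is read at import time.
os.environ["SUNO_USE_SMALL_MODELS"] = "True"

# Import bark only after the flag is set (requires bark to be installed):
# from bark import SAMPLE_RATE, generate_audio, preload_models
# preload_models()
```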

+

+If you don't have hardware available or if you want to play with bigger versions of our models, you can also sign up for early access to our model playground [here](https://3os84zs17th.typeform.com/suno-studio).

## ⚙️ Details

-Similar to [Vall-E](https://arxiv.org/abs/2301.02111) and some other amazing work in the field, Bark uses GPT-style

-models to generate audio from scratch. Different from Vall-E, the initial text prompt is embedded into high-level semantic tokens without the use of phonemes. It can therefore generalize to arbitrary instructions beyond speech that occur in the training data, such as music lyrics, sound effects or other non-speech sounds. A subsequent second model is used to convert the generated semantic tokens into audio codec tokens to generate the full waveform. To enable the community to use Bark via public code we used the fantastic

-[EnCodec codec](https://github.com/facebookresearch/encodec) from Facebook to act as an audio representation.

+Bark is a fully generative text-to-audio model developed for research and demo purposes. It follows a GPT-style architecture similar to [AudioLM](https://arxiv.org/abs/2209.03143) and [Vall-E](https://arxiv.org/abs/2301.02111), combined with a quantized audio representation from [EnCodec](https://github.com/facebookresearch/encodec). It is not a conventional TTS model, but instead a fully generative text-to-audio model capable of deviating in unexpected ways from any given script. Unlike previous approaches, Bark converts the input text prompt directly to audio without the intermediate use of phonemes. It can therefore generalize to arbitrary instructions beyond speech, such as music lyrics, sound effects or other non-speech sounds.

Below is a list of some known non-speech sounds, but we are finding more every day. Please let us know if you find patterns that work particularly well on [Discord](https://discord.gg/J2B2vsjKuE)!

@@ -145,10 +184,10 @@ Below is a list of some known non-speech sounds, but we are finding more every d

- `[clears throat]`

- `—` or `...` for hesitations

- `♪` for song lyrics

-- capitalization for emphasis of a word

-- `MAN/WOMAN:` for bias towards speaker

+- CAPITALIZATION for emphasis of a word

+- `[MAN]` and `[WOMAN]` to bias Bark toward male and female speakers, respectively

-**Supported Languages**

+### Supported Languages

| Language | Status |

| --- | --- |

@@ -165,22 +204,21 @@ Below is a list of some known non-speech sounds, but we are finding more every d

| Russian (ru) | ✅ |

| Turkish (tr) | ✅ |

| Chinese, simplified (zh) | ✅ |

-| Arabic | Coming soon! |

-| Bengali | Coming soon! |

-| Telugu | Coming soon! |

+

+Request future language support [here](https://github.com/suno-ai/bark/discussions/111) or in the **#forums** channel on [Discord](https://discord.com/invite/J2B2vsjKuE).

## 🙏 Appreciation

- [nanoGPT](https://github.com/karpathy/nanoGPT) for a dead-simple and blazing fast implementation of GPT-style models

- [EnCodec](https://github.com/facebookresearch/encodec) for a state-of-the-art implementation of a fantastic audio codec

-- [AudioLM](https://github.com/lucidrains/audiolm-pytorch) for very related training and inference code

+- [AudioLM](https://github.com/lucidrains/audiolm-pytorch) for related training and inference code

- [Vall-E](https://arxiv.org/abs/2301.02111), [AudioLM](https://arxiv.org/abs/2209.03143) and many other ground-breaking papers that enabled the development of Bark

## © License

-Bark is licensed under a non-commercial license: CC-BY 4.0 NC. The Suno models themselves may be used commercially. However, this version of Bark uses `EnCodec` as a neural codec backend, which is licensed under a [non-commercial license](https://github.com/facebookresearch/encodec/blob/main/LICENSE).

+Bark is licensed under the MIT License.

-Please contact us at `bark@suno.ai` if you need access to a larger version of the model and/or a version of the model you can use commercially.

+Please contact us at `bark@suno.ai` to request access to a larger version of the model.

## 📱 Community

@@ -193,12 +231,29 @@ We’re developing a playground for our models, including Bark.

If you are interested, you can sign up for early access [here](https://3os84zs17th.typeform.com/suno-studio).

-## FAQ

+## ❓ FAQ

#### How do I specify where models are downloaded and cached?

+* Bark uses Hugging Face to download and store models. You can find more info [here](https://huggingface.co/docs/huggingface_hub/package_reference/environment_variables#hfhome).

-Use the `XDG_CACHE_HOME` env variable to override where models are downloaded and cached (otherwise defaults to a subdirectory of `~/.cache`).

#### Bark's generations sometimes differ from my prompts. What's happening?

+* Bark is a GPT-style model. As such, it may take some creative liberties in its generations, resulting in higher-variance model outputs than traditional text-to-speech approaches.

-Bark is a GPT-style model. As such, it may take some creative liberties in its generations, resulting in higher-variance model outputs than traditional text-to-speech approaches.

+#### What voices are supported by Bark?

+* Bark supports 100+ speaker presets across [supported languages](#supported-languages). You can browse the library of speaker presets [here](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c). The community also shares presets on [Discord](https://discord.gg/J2B2vsjKuE). Bark also supports generating unique random voices that fit the input text. Bark does not currently support custom voice cloning.

+

+#### Why is the output limited to ~13-14 seconds?

+* Bark is a GPT-style model, and its architecture/context window is optimized to output generations with roughly this length.

+

+#### How much VRAM do I need?

+* The full version of Bark requires around 12GB of memory to hold everything on GPU at the same time. However, even smaller cards down to ~2GB work with some additional settings. Add the following code snippet at the start of your script, before importing `bark`:

+

+```python

+import os

+# Environment variable values must be strings

+os.environ["SUNO_OFFLOAD_CPU"] = "True"

+os.environ["SUNO_USE_SMALL_MODELS"] = "True"

+```

+

+#### My generated audio sounds like a 1980s phone call. What's happening?

+* Bark generates audio from scratch. It is not meant to create only high-fidelity, studio-quality speech. Rather, outputs could be anything from perfect speech to multiple people arguing at a baseball game recorded with bad microphones.

diff --git a/bark/api.py b/bark/api.py

index e1c7556..7b646b7 100644

--- a/bark/api.py

+++ b/bark/api.py

@@ -1,4 +1,4 @@

-from typing import Optional

+from typing import Dict, Optional, Union

import numpy as np

@@ -7,7 +7,7 @@ from .generation import codec_decode, generate_coarse, generate_fine, generate_t

def text_to_semantic(

text: str,

- history_prompt: Optional[str] = None,

+ history_prompt: Optional[Union[Dict, str]] = None,

temp: float = 0.7,

silent: bool = False,

):

@@ -34,7 +34,7 @@ def text_to_semantic(

def semantic_to_waveform(

semantic_tokens: np.ndarray,

- history_prompt: Optional[str] = None,

+ history_prompt: Optional[Union[Dict, str]] = None,

temp: float = 0.7,

silent: bool = False,

output_full: bool = False,

@@ -85,7 +85,7 @@ def save_as_prompt(filepath, full_generation):

def generate_audio(

text: str,

- history_prompt: Optional[str] = None,

+ history_prompt: Optional[Union[Dict, str]] = None,

text_temp: float = 0.7,

waveform_temp: float = 0.7,

silent: bool = False,
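The signature change above widens `history_prompt` from `Optional[str]` to `Optional[Union[Dict, str]]`. As a rough illustration of what accepting both forms can involve, here is a hypothetical helper (`resolve_history_prompt` is not part of Bark's API) that passes preset names such as `v2/en_speaker_1` through unchanged, loads strings ending in `.npz` from disk, and checks that a dict carries the three arrays named in the prompts readme (`semantic_prompt`, `coarse_prompt`, `fine_prompt`). It is a sketch under those assumptions, not Bark's actual loading logic.

```python
from typing import Dict, Union

import numpy as np

# Array names taken from the prompts readme; treating all three as required is an assumption
REQUIRED_KEYS = ("semantic_prompt", "coarse_prompt", "fine_prompt")


def resolve_history_prompt(prompt: Union[Dict, str]) -> Union[Dict[str, np.ndarray], str]:
    """Hypothetical helper: normalize a history prompt given as a dict or a string."""
    if isinstance(prompt, dict):
        missing = [k for k in REQUIRED_KEYS if k not in prompt]
        if missing:
            raise ValueError(f"history prompt dict is missing keys: {missing}")
        # Keep only the expected arrays, converted to NumPy arrays
        return {k: np.asarray(prompt[k]) for k in REQUIRED_KEYS}
    if isinstance(prompt, str) and prompt.endswith(".npz"):
        # np.load returns a lazily-read NpzFile; copy the arrays out before it closes
        with np.load(prompt) as data:
            return resolve_history_prompt({k: data[k] for k in data.files})
    if isinstance(prompt, str):
        # Preset names are left for the library to resolve
        return prompt
    raise TypeError(f"unsupported history prompt type: {type(prompt).__name__}")
```

Accepting a dict lets a caller reuse arrays already in memory, for example ones produced earlier in the same session, without writing them to an `.npz` file first.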

diff --git a/bark/assets/prompts/readme.md b/bark/assets/prompts/readme.md

index 2844c37..b01ae91 100644

--- a/bark/assets/prompts/readme.md

+++ b/bark/assets/prompts/readme.md

@@ -1,5 +1,15 @@

# Example Prompts Data

+## Version Two

+The `v2` prompts are better engineered to follow text with a consistent voice.

+To use them, prefix the speaker name with `v2/` in `history_prompt`. For example:

+```python

+from bark import generate_audio

+text_prompt = "madam I'm adam"

+audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")

+```
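The `v2` preset files added in this diff follow a `v2/<lang>_speaker_<n>` naming pattern. The sketch below builds those `history_prompt` strings so you can iterate over voices. The language codes are copied from the file names in this diff, and the assumption that every language has speakers `0` through `9` is based on the `de` through `tr` files shown here. `v2_preset_names` is a hypothetical helper, not a Bark function.

```python
from typing import Iterable, List

# Language codes as they appear in the bark/assets/prompts/v2/ file names
V2_LANGUAGES = ("de", "en", "es", "fr", "hi", "it", "ja", "ko", "pl", "pt", "ru", "tr", "zh")


def v2_preset_names(
    languages: Iterable[str] = V2_LANGUAGES,
    speakers: Iterable[int] = range(10),  # assumption: speakers 0-9 for every language
) -> List[str]:
    """Build history_prompt strings of the form 'v2/<lang>_speaker_<n>'."""
    speakers = list(speakers)  # allow any iterable to be reused for each language
    return [f"v2/{lang}_speaker_{n}" for lang in languages for n in speakers]
```

Each returned name can be passed as `history_prompt` in the `generate_audio` call above, for example to audition every English speaker with `v2_preset_names(["en"])`.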

+

+## Prompt Format

The provided data is in the .npz format, which is a file format used in Python for storing arrays and data. The data contains three arrays: semantic_prompt, coarse_prompt, and fine_prompt.

```semantic_prompt```
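To see the three arrays in a prompt file for yourself, the sketch below (a hypothetical `describe_prompt` helper that uses only NumPy) opens an `.npz` archive and reports each array's name, shape, and dtype. It makes no assumptions about the expected shapes; pointing it at one of the bundled presets, such as `bark/assets/prompts/v2/en_speaker_1.npz`, shows the real values.

```python
import numpy as np


def describe_prompt(path: str) -> dict:
    """Map each array name in an .npz file to a (shape, dtype) pair."""
    # np.load returns a lazily-read NpzFile for .npz archives; the context
    # manager closes the underlying file once the metadata has been read
    with np.load(path) as data:
        return {name: (data[name].shape, str(data[name].dtype)) for name in data.files}
```

Run it from the repository root, e.g. `print(describe_prompt("bark/assets/prompts/v2/en_speaker_1.npz"))`, to compare presets across languages.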

diff --git a/bark/assets/prompts/v2/de_speaker_0.npz b/bark/assets/prompts/v2/de_speaker_0.npz

new file mode 100644

index 0000000..0a10369

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_1.npz b/bark/assets/prompts/v2/de_speaker_1.npz

new file mode 100644

index 0000000..da143ea

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_2.npz b/bark/assets/prompts/v2/de_speaker_2.npz

new file mode 100644

index 0000000..26aef4d

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_3.npz b/bark/assets/prompts/v2/de_speaker_3.npz

new file mode 100644

index 0000000..3bb685e

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_4.npz b/bark/assets/prompts/v2/de_speaker_4.npz

new file mode 100644

index 0000000..7fae90c

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_5.npz b/bark/assets/prompts/v2/de_speaker_5.npz

new file mode 100644

index 0000000..c5f2527

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_6.npz b/bark/assets/prompts/v2/de_speaker_6.npz

new file mode 100644

index 0000000..5b26055

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_7.npz b/bark/assets/prompts/v2/de_speaker_7.npz

new file mode 100644

index 0000000..f320937

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_8.npz b/bark/assets/prompts/v2/de_speaker_8.npz

new file mode 100644

index 0000000..09bfc68

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/de_speaker_9.npz b/bark/assets/prompts/v2/de_speaker_9.npz

new file mode 100644

index 0000000..bbbef85

Binary files /dev/null and b/bark/assets/prompts/v2/de_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_0.npz b/bark/assets/prompts/v2/en_speaker_0.npz

new file mode 100644

index 0000000..1ee9aee

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_1.npz b/bark/assets/prompts/v2/en_speaker_1.npz

new file mode 100644

index 0000000..381f175

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_2.npz b/bark/assets/prompts/v2/en_speaker_2.npz

new file mode 100644

index 0000000..015b2e5

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_3.npz b/bark/assets/prompts/v2/en_speaker_3.npz

new file mode 100644

index 0000000..e77005a

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_4.npz b/bark/assets/prompts/v2/en_speaker_4.npz

new file mode 100644

index 0000000..c75e9e6

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_5.npz b/bark/assets/prompts/v2/en_speaker_5.npz

new file mode 100644

index 0000000..6a82e9a

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_6.npz b/bark/assets/prompts/v2/en_speaker_6.npz

new file mode 100644

index 0000000..fd1d9a0

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_7.npz b/bark/assets/prompts/v2/en_speaker_7.npz

new file mode 100644

index 0000000..3e91009

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_8.npz b/bark/assets/prompts/v2/en_speaker_8.npz

new file mode 100644

index 0000000..5e5077b

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/en_speaker_9.npz b/bark/assets/prompts/v2/en_speaker_9.npz

new file mode 100644

index 0000000..c8798ed

Binary files /dev/null and b/bark/assets/prompts/v2/en_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_0.npz b/bark/assets/prompts/v2/es_speaker_0.npz

new file mode 100644

index 0000000..11877a1

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_1.npz b/bark/assets/prompts/v2/es_speaker_1.npz

new file mode 100644

index 0000000..1daabec

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_2.npz b/bark/assets/prompts/v2/es_speaker_2.npz

new file mode 100644

index 0000000..ecbe04c

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_3.npz b/bark/assets/prompts/v2/es_speaker_3.npz

new file mode 100644

index 0000000..1d02fd7

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_4.npz b/bark/assets/prompts/v2/es_speaker_4.npz

new file mode 100644

index 0000000..3881e0d

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_5.npz b/bark/assets/prompts/v2/es_speaker_5.npz

new file mode 100644

index 0000000..bf67639

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_6.npz b/bark/assets/prompts/v2/es_speaker_6.npz

new file mode 100644

index 0000000..4c0b79f

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_7.npz b/bark/assets/prompts/v2/es_speaker_7.npz

new file mode 100644

index 0000000..d9bcde1

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_8.npz b/bark/assets/prompts/v2/es_speaker_8.npz

new file mode 100644

index 0000000..ffc5483

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/es_speaker_9.npz b/bark/assets/prompts/v2/es_speaker_9.npz

new file mode 100644

index 0000000..c68e682

Binary files /dev/null and b/bark/assets/prompts/v2/es_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_0.npz b/bark/assets/prompts/v2/fr_speaker_0.npz

new file mode 100644

index 0000000..1868338

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_1.npz b/bark/assets/prompts/v2/fr_speaker_1.npz

new file mode 100644

index 0000000..6b5a90b

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_2.npz b/bark/assets/prompts/v2/fr_speaker_2.npz

new file mode 100644

index 0000000..68a7df3

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_3.npz b/bark/assets/prompts/v2/fr_speaker_3.npz

new file mode 100644

index 0000000..1c01916

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_4.npz b/bark/assets/prompts/v2/fr_speaker_4.npz

new file mode 100644

index 0000000..ca1cdd7

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_5.npz b/bark/assets/prompts/v2/fr_speaker_5.npz

new file mode 100644

index 0000000..5f20543

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_6.npz b/bark/assets/prompts/v2/fr_speaker_6.npz

new file mode 100644

index 0000000..31e98c6

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_7.npz b/bark/assets/prompts/v2/fr_speaker_7.npz

new file mode 100644

index 0000000..bcae0a2

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_8.npz b/bark/assets/prompts/v2/fr_speaker_8.npz

new file mode 100644

index 0000000..baa1c08

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/fr_speaker_9.npz b/bark/assets/prompts/v2/fr_speaker_9.npz

new file mode 100644

index 0000000..2b1e251

Binary files /dev/null and b/bark/assets/prompts/v2/fr_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_0.npz b/bark/assets/prompts/v2/hi_speaker_0.npz

new file mode 100644

index 0000000..c455fd7

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_1.npz b/bark/assets/prompts/v2/hi_speaker_1.npz

new file mode 100644

index 0000000..1c3f19b

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_2.npz b/bark/assets/prompts/v2/hi_speaker_2.npz

new file mode 100644

index 0000000..7806586

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_3.npz b/bark/assets/prompts/v2/hi_speaker_3.npz

new file mode 100644

index 0000000..2d74f31

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_4.npz b/bark/assets/prompts/v2/hi_speaker_4.npz

new file mode 100644

index 0000000..46d877f

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_5.npz b/bark/assets/prompts/v2/hi_speaker_5.npz

new file mode 100644

index 0000000..1bf5c8d

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_6.npz b/bark/assets/prompts/v2/hi_speaker_6.npz

new file mode 100644

index 0000000..0e72810

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_7.npz b/bark/assets/prompts/v2/hi_speaker_7.npz

new file mode 100644

index 0000000..2befa5a

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_8.npz b/bark/assets/prompts/v2/hi_speaker_8.npz

new file mode 100644

index 0000000..3d1c4e9

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/hi_speaker_9.npz b/bark/assets/prompts/v2/hi_speaker_9.npz

new file mode 100644

index 0000000..730698a

Binary files /dev/null and b/bark/assets/prompts/v2/hi_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_0.npz b/bark/assets/prompts/v2/it_speaker_0.npz

new file mode 100644

index 0000000..c7132ba

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_1.npz b/bark/assets/prompts/v2/it_speaker_1.npz

new file mode 100644

index 0000000..cee09c9

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_2.npz b/bark/assets/prompts/v2/it_speaker_2.npz

new file mode 100644

index 0000000..cae1fe3

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_3.npz b/bark/assets/prompts/v2/it_speaker_3.npz

new file mode 100644

index 0000000..f7e970b

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_4.npz b/bark/assets/prompts/v2/it_speaker_4.npz

new file mode 100644

index 0000000..82888dd

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_5.npz b/bark/assets/prompts/v2/it_speaker_5.npz

new file mode 100644

index 0000000..c8cce48

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_6.npz b/bark/assets/prompts/v2/it_speaker_6.npz

new file mode 100644

index 0000000..3b086b3

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_7.npz b/bark/assets/prompts/v2/it_speaker_7.npz

new file mode 100644

index 0000000..16f4b43

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_8.npz b/bark/assets/prompts/v2/it_speaker_8.npz

new file mode 100644

index 0000000..682aad2

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/it_speaker_9.npz b/bark/assets/prompts/v2/it_speaker_9.npz

new file mode 100644

index 0000000..09c60c3

Binary files /dev/null and b/bark/assets/prompts/v2/it_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_0.npz b/bark/assets/prompts/v2/ja_speaker_0.npz

new file mode 100644

index 0000000..d2a8d56

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_1.npz b/bark/assets/prompts/v2/ja_speaker_1.npz

new file mode 100644

index 0000000..96016df

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_2.npz b/bark/assets/prompts/v2/ja_speaker_2.npz

new file mode 100644

index 0000000..3eeedc2

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_3.npz b/bark/assets/prompts/v2/ja_speaker_3.npz

new file mode 100644

index 0000000..8037585

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_4.npz b/bark/assets/prompts/v2/ja_speaker_4.npz

new file mode 100644

index 0000000..351a693

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_5.npz b/bark/assets/prompts/v2/ja_speaker_5.npz

new file mode 100644

index 0000000..b4ab9e8

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_6.npz b/bark/assets/prompts/v2/ja_speaker_6.npz

new file mode 100644

index 0000000..b56af0a

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_7.npz b/bark/assets/prompts/v2/ja_speaker_7.npz

new file mode 100644

index 0000000..74d4b46

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_8.npz b/bark/assets/prompts/v2/ja_speaker_8.npz

new file mode 100644

index 0000000..3f2d224

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/ja_speaker_9.npz b/bark/assets/prompts/v2/ja_speaker_9.npz

new file mode 100644

index 0000000..1a5a545

Binary files /dev/null and b/bark/assets/prompts/v2/ja_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_0.npz b/bark/assets/prompts/v2/ko_speaker_0.npz

new file mode 100644

index 0000000..1d4eeda

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_1.npz b/bark/assets/prompts/v2/ko_speaker_1.npz

new file mode 100644

index 0000000..90510ff

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_2.npz b/bark/assets/prompts/v2/ko_speaker_2.npz

new file mode 100644

index 0000000..adc8774

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_3.npz b/bark/assets/prompts/v2/ko_speaker_3.npz

new file mode 100644

index 0000000..42d9690

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_4.npz b/bark/assets/prompts/v2/ko_speaker_4.npz

new file mode 100644

index 0000000..a486d79

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_5.npz b/bark/assets/prompts/v2/ko_speaker_5.npz

new file mode 100644

index 0000000..b3c6857

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_6.npz b/bark/assets/prompts/v2/ko_speaker_6.npz

new file mode 100644

index 0000000..c966005

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_7.npz b/bark/assets/prompts/v2/ko_speaker_7.npz

new file mode 100644

index 0000000..9098a97

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_8.npz b/bark/assets/prompts/v2/ko_speaker_8.npz

new file mode 100644

index 0000000..e5eb9ff

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/ko_speaker_9.npz b/bark/assets/prompts/v2/ko_speaker_9.npz

new file mode 100644

index 0000000..60086b0

Binary files /dev/null and b/bark/assets/prompts/v2/ko_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_0.npz b/bark/assets/prompts/v2/pl_speaker_0.npz

new file mode 100644

index 0000000..03b8f5f

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_1.npz b/bark/assets/prompts/v2/pl_speaker_1.npz

new file mode 100644

index 0000000..a33e3ba

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_2.npz b/bark/assets/prompts/v2/pl_speaker_2.npz

new file mode 100644

index 0000000..b236952

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_3.npz b/bark/assets/prompts/v2/pl_speaker_3.npz

new file mode 100644

index 0000000..04fab12

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_4.npz b/bark/assets/prompts/v2/pl_speaker_4.npz

new file mode 100644

index 0000000..48dbf19

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_5.npz b/bark/assets/prompts/v2/pl_speaker_5.npz

new file mode 100644

index 0000000..80fcbd9

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_6.npz b/bark/assets/prompts/v2/pl_speaker_6.npz

new file mode 100644

index 0000000..46c86cd

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_7.npz b/bark/assets/prompts/v2/pl_speaker_7.npz

new file mode 100644

index 0000000..49a7f20

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_8.npz b/bark/assets/prompts/v2/pl_speaker_8.npz

new file mode 100644

index 0000000..8bb593d

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/pl_speaker_9.npz b/bark/assets/prompts/v2/pl_speaker_9.npz

new file mode 100644

index 0000000..7031a57

Binary files /dev/null and b/bark/assets/prompts/v2/pl_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_0.npz b/bark/assets/prompts/v2/pt_speaker_0.npz

new file mode 100644

index 0000000..76aa02a

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_1.npz b/bark/assets/prompts/v2/pt_speaker_1.npz

new file mode 100644

index 0000000..0119298

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_2.npz b/bark/assets/prompts/v2/pt_speaker_2.npz

new file mode 100644

index 0000000..9e4e401

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_3.npz b/bark/assets/prompts/v2/pt_speaker_3.npz

new file mode 100644

index 0000000..2198310

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_4.npz b/bark/assets/prompts/v2/pt_speaker_4.npz

new file mode 100644

index 0000000..21c8f0e

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_5.npz b/bark/assets/prompts/v2/pt_speaker_5.npz

new file mode 100644

index 0000000..3245b43

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_6.npz b/bark/assets/prompts/v2/pt_speaker_6.npz

new file mode 100644

index 0000000..f705295

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_7.npz b/bark/assets/prompts/v2/pt_speaker_7.npz

new file mode 100644

index 0000000..c60affd

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_8.npz b/bark/assets/prompts/v2/pt_speaker_8.npz

new file mode 100644

index 0000000..64c9d8c

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/pt_speaker_9.npz b/bark/assets/prompts/v2/pt_speaker_9.npz

new file mode 100644

index 0000000..c7b891b

Binary files /dev/null and b/bark/assets/prompts/v2/pt_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_0.npz b/bark/assets/prompts/v2/ru_speaker_0.npz

new file mode 100644

index 0000000..4af14b5

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_1.npz b/bark/assets/prompts/v2/ru_speaker_1.npz

new file mode 100644

index 0000000..8ab3c7e

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_2.npz b/bark/assets/prompts/v2/ru_speaker_2.npz

new file mode 100644

index 0000000..2bdfc39

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_3.npz b/bark/assets/prompts/v2/ru_speaker_3.npz

new file mode 100644

index 0000000..32047d1

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_4.npz b/bark/assets/prompts/v2/ru_speaker_4.npz

new file mode 100644

index 0000000..5c3f47a

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_5.npz b/bark/assets/prompts/v2/ru_speaker_5.npz

new file mode 100644

index 0000000..6c73ed3

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_6.npz b/bark/assets/prompts/v2/ru_speaker_6.npz

new file mode 100644

index 0000000..6171e1d

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_7.npz b/bark/assets/prompts/v2/ru_speaker_7.npz

new file mode 100644

index 0000000..294c34d

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_8.npz b/bark/assets/prompts/v2/ru_speaker_8.npz

new file mode 100644

index 0000000..abd95d8

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/ru_speaker_9.npz b/bark/assets/prompts/v2/ru_speaker_9.npz

new file mode 100644

index 0000000..06ab868

Binary files /dev/null and b/bark/assets/prompts/v2/ru_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_0.npz b/bark/assets/prompts/v2/tr_speaker_0.npz

new file mode 100644

index 0000000..6d6be17

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_1.npz b/bark/assets/prompts/v2/tr_speaker_1.npz

new file mode 100644

index 0000000..76a6f8d

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_2.npz b/bark/assets/prompts/v2/tr_speaker_2.npz

new file mode 100644

index 0000000..09b86b1

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_3.npz b/bark/assets/prompts/v2/tr_speaker_3.npz

new file mode 100644

index 0000000..4e64e64

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_4.npz b/bark/assets/prompts/v2/tr_speaker_4.npz

new file mode 100644

index 0000000..2e238f6

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_5.npz b/bark/assets/prompts/v2/tr_speaker_5.npz

new file mode 100644

index 0000000..ad62887

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_6.npz b/bark/assets/prompts/v2/tr_speaker_6.npz

new file mode 100644

index 0000000..586d29f

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_7.npz b/bark/assets/prompts/v2/tr_speaker_7.npz

new file mode 100644

index 0000000..64a8c61

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_8.npz b/bark/assets/prompts/v2/tr_speaker_8.npz

new file mode 100644

index 0000000..f5a50cd

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/tr_speaker_9.npz b/bark/assets/prompts/v2/tr_speaker_9.npz

new file mode 100644

index 0000000..6c1b234

Binary files /dev/null and b/bark/assets/prompts/v2/tr_speaker_9.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_0.npz b/bark/assets/prompts/v2/zh_speaker_0.npz

new file mode 100644

index 0000000..0bd0db1

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_0.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_1.npz b/bark/assets/prompts/v2/zh_speaker_1.npz

new file mode 100644

index 0000000..8c593ef

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_1.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_2.npz b/bark/assets/prompts/v2/zh_speaker_2.npz

new file mode 100644

index 0000000..eda3b26

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_2.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_3.npz b/bark/assets/prompts/v2/zh_speaker_3.npz

new file mode 100644

index 0000000..d410990

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_3.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_4.npz b/bark/assets/prompts/v2/zh_speaker_4.npz

new file mode 100644

index 0000000..a0417e8

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_4.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_5.npz b/bark/assets/prompts/v2/zh_speaker_5.npz

new file mode 100644

index 0000000..e24abe3

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_5.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_6.npz b/bark/assets/prompts/v2/zh_speaker_6.npz

new file mode 100644

index 0000000..efc598a

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_6.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_7.npz b/bark/assets/prompts/v2/zh_speaker_7.npz

new file mode 100644

index 0000000..fdecb43

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_7.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_8.npz b/bark/assets/prompts/v2/zh_speaker_8.npz

new file mode 100644

index 0000000..0b775c5

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_8.npz differ

diff --git a/bark/assets/prompts/v2/zh_speaker_9.npz b/bark/assets/prompts/v2/zh_speaker_9.npz

new file mode 100644

index 0000000..04fdfae

Binary files /dev/null and b/bark/assets/prompts/v2/zh_speaker_9.npz differ

diff --git a/bark/generation.py b/bark/generation.py

index 64b3c47..3769734 100644

--- a/bark/generation.py

+++ b/bark/generation.py

@@ -1,9 +1,7 @@

import contextlib

import gc

-import hashlib

import os

import re

-import requests

from encodec import EncodecModel

import funcy

@@ -72,8 +70,9 @@ SUPPORTED_LANGS = [

ALLOWED_PROMPTS = {"announcer"}

for _, lang in SUPPORTED_LANGS:

-    for n in range(10):

-        ALLOWED_PROMPTS.add(f"{lang}_speaker_{n}")

+    for prefix in ("", f"v2{os.path.sep}"):

+        for n in range(10):

+            ALLOWED_PROMPTS.add(f"{prefix}{lang}_speaker_{n}")

logger = logging.getLogger(__name__)

@@ -95,32 +94,26 @@ REMOTE_MODEL_PATHS = {

     "text_small": {

         "repo_id": "suno/bark",

         "file_name": "text.pt",

-        "checksum": "b3e42bcbab23b688355cd44128c4cdd3",

     },

     "coarse_small": {

         "repo_id": "suno/bark",

         "file_name": "coarse.pt",

-        "checksum": "5fe964825e3b0321f9d5f3857b89194d",

     },

     "fine_small": {

         "repo_id": "suno/bark",

         "file_name": "fine.pt",

-        "checksum": "5428d1befe05be2ba32195496e58dc90",

     },

     "text": {

         "repo_id": "suno/bark",

         "file_name": "text_2.pt",

-        "checksum": "54afa89d65e318d4f5f80e8e8799026a",

     },

     "coarse": {

         "repo_id": "suno/bark",

         "file_name": "coarse_2.pt",

-        "checksum": "8a98094e5e3a255a5c9c0ab7efe8fd28",

     },

     "fine": {

         "repo_id": "suno/bark",

         "file_name": "fine_2.pt",

-        "checksum": "59d184ed44e3650774a2f0503a48a97b",

     },

}

@@ -132,26 +125,6 @@ if not hasattr(torch.nn.functional, 'scaled_dot_product_attention') and torch.cu

)

-def _string_md5(s):

-    m = hashlib.md5()

-    m.update(s.encode("utf-8"))

-    return m.hexdigest()

-

-

-def _md5(fname):

-    hash_md5 = hashlib.md5()

-    with open(fname, "rb") as f:

-        for chunk in iter(lambda: f.read(4096), b""):

-            hash_md5.update(chunk)

-    return hash_md5.hexdigest()

-

-

-def _get_ckpt_path(model_type, use_small=False):

-    model_key = f"{model_type}_small" if use_small or USE_SMALL_MODELS else model_type

-    model_name = _string_md5(REMOTE_MODEL_PATHS[model_key]["file_name"])

-    return os.path.join(CACHE_DIR, f"{model_name}.pt")

-

-

 def _grab_best_device(use_gpu=True):

     if torch.cuda.device_count() > 0 and use_gpu:

         device = "cuda"

@@ -162,11 +135,17 @@ def _grab_best_device(use_gpu=True):

     return device

-def _download(from_hf_path, file_name, to_local_path):

+def _get_ckpt_path(model_type, use_small=False):

+    key = model_type

+    if use_small:

+        key += "_small"

+    return os.path.join(CACHE_DIR, REMOTE_MODEL_PATHS[key]["file_name"])

+

+

+def _download(from_hf_path, file_name):

     os.makedirs(CACHE_DIR, exist_ok=True)

-    destination_file_name = to_local_path.split("/")[-1]

     hf_hub_download(repo_id=from_hf_path, filename=file_name, local_dir=CACHE_DIR)

-    os.replace(os.path.join(CACHE_DIR, file_name), to_local_path)

+

 class InferenceContext:

     def __init__(self, benchmark=False):

@@ -223,15 +202,9 @@ def _load_model(ckpt_path, device, use_small=False, model_type="text"):

         raise NotImplementedError()

     model_key = f"{model_type}_small" if use_small or USE_SMALL_MODELS else model_type

     model_info = REMOTE_MODEL_PATHS[model_key]

-    if (

-        os.path.exists(ckpt_path) and

-        _md5(ckpt_path) != model_info["checksum"]

-    ):

-        logger.warning(f"found outdated {model_type} model, removing.")

-        os.remove(ckpt_path)

     if not os.path.exists(ckpt_path):

         logger.info(f"{model_type} model not found, downloading into `{CACHE_DIR}`.")

-        _download(model_info["repo_id"], model_info["file_name"], ckpt_path)

+        _download(model_info["repo_id"], model_info["file_name"])

     checkpoint = torch.load(ckpt_path, map_location=device)

     # this is a hack

     model_args = checkpoint["model_args"]

@@ -376,6 +349,25 @@ TEXT_PAD_TOKEN = 129_595

SEMANTIC_INFER_TOKEN = 129_599

+def _load_history_prompt(history_prompt_input):

+    if isinstance(history_prompt_input, str) and history_prompt_input.endswith(".npz"):

+        history_prompt = np.load(history_prompt_input)

+    elif isinstance(history_prompt_input, str):

+        if history_prompt_input not in ALLOWED_PROMPTS:

+            raise ValueError("history prompt not found")

+        history_prompt = np.load(

+            os.path.join(CUR_PATH, "assets", "prompts", f"{history_prompt_input}.npz")

+        )

+    elif isinstance(history_prompt_input, dict):

+        assert("semantic_prompt" in history_prompt_input)

+        assert("coarse_prompt" in history_prompt_input)

+        assert("fine_prompt" in history_prompt_input)

+        history_prompt = history_prompt_input

+    else:

+        raise ValueError("history prompt format unrecognized")

+    return history_prompt

+

+

 def generate_text_semantic(

     text,

     history_prompt=None,

@@ -393,13 +385,8 @@ def generate_text_semantic(

     text = _normalize_whitespace(text)

     assert len(text.strip()) > 0

     if history_prompt is not None:

-        if history_prompt.endswith(".npz"):

-            semantic_history = np.load(history_prompt)["semantic_prompt"]

-        else:

-            assert (history_prompt in ALLOWED_PROMPTS)

-            semantic_history = np.load(

-                os.path.join(CUR_PATH, "assets", "prompts", f"{history_prompt}.npz")

-            )["semantic_prompt"]