Merge branch 'kubernetes:main' into expandcsivolume-ga

commit 439678c580

@@ -32,9 +32,15 @@ The KMS v2 enhancement attempts to address all of these shortcomings, though not

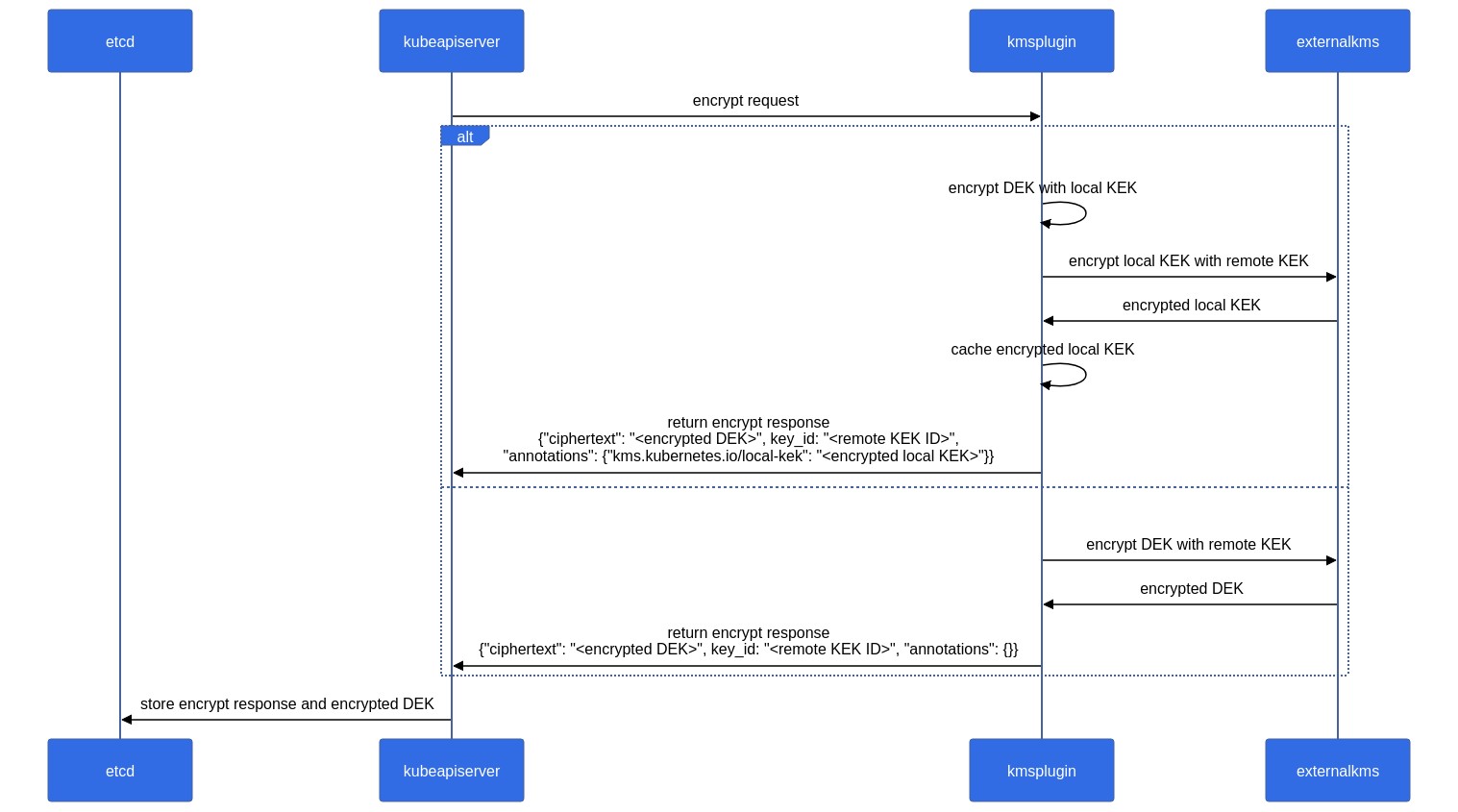

### Sequence Diagram

|

||||

|

||||

#### Encrypt Request

|

||||

[](https://mermaid.live/edit#pako:eNrNVD1v2zAQ_SsEC0GLkxgt2kEIvEQeCo8tOgkoTuTJIiyRypFMIwj67yUlxx-w0CLoUg0a7t29e3eP5MCFkcgzniSD0splbEhdjS2mGUtLsJiu2Bz4AaSgbNAGZGBpR6oF6p9MYyjmfvj08YvAzzH9CH3HV3eGq6qaqK6C6_U6HccxSQpt8dmjFpgr2BO0hWbh64CcEqoD7Rg6IW-jB18idMoivSAtwK3tGr9XeoHv1SFpaELKDF5R3W02p9qMBWHUd45RFGndnA-NY94qvWcH7FmtkIBE3c_gRPhGsEyWb3fsl3I1a4yAhu22u-XSC6Hn4lPNTEHYGofXHBd1iwJQ_q3zRY0AUeM7Ki93mQV5zpO-WKPtTHCcPZb0sGFDwYMnNVI8HAXPWMEfz53CmjYFX8Ul_1RyAs_Tsq_5BM5EBQetjQOnAnskCsxB1X1UQxod2ntlHibpdwc83LQ6DRU4x3GeDJugM5D-2eokYcITYThXJdbwogy9w8z8H23M_xcbbg04rVHL5VsWr3XGrDOEt8JAy6Ux45-veIvUgpLh8RpipODTOzUrl1iBb8IYhR5Dqu8kONxKFfrwrIJg6oqDd-ZbrwXPHHl8Szo-QMes8Tffb72O)

|

||||

|

||||

<!-- source - https://mermaid.ink/img/pako:eNrNVD1v2zAQ_SsEC0GLkxgt2kEIvEQeCo8tOgkoTuTJIiyRypFMIwj67yUlxx-w0CLoUg0a7t29e3eP5MCFkcgzniSD0splbEhdjS2mGUtLsJiu2Bz4AaSgbNAGZGBpR6oF6p9MYyjmfvj08YvAzzH9CH3HV3eGq6qaqK6C6_U6HccxSQpt8dmjFpgr2BO0hWbh64CcEqoD7Rg6IW-jB18idMoivSAtwK3tGr9XeoHv1SFpaELKDF5R3W02p9qMBWHUd45RFGndnA-NY94qvWcH7FmtkIBE3c_gRPhGsEyWb3fsl3I1a4yAhu22u-XSC6Hn4lPNTEHYGofXHBd1iwJQ_q3zRY0AUeM7Ki93mQV5zpO-WKPtTHCcPZb0sGFDwYMnNVI8HAXPWMEfz53CmjYFX8Ul_1RyAs_Tsq_5BM5EBQetjQOnAnskCsxB1X1UQxod2ntlHibpdwc83LQ6DRU4x3GeDJugM5D-2eokYcITYThXJdbwogy9w8z8H23M_xcbbg04rVHL5VsWr3XGrDOEt8JAy6Ux45-veIvUgpLh8RpipODTOzUrl1iBb8IYhR5Dqu8kONxKFfrwrIJg6oqDd-ZbrwXPHHl8Szo-QMes8Tffb72O)](https://mermaid.live/edit#pako:eNrNVD1v2zAQ_SsEC0GLkxgt2kEIvEQeCo8tOgkoTuTJIiyRypFMIwj67yUlxx-w0CLoUg0a7t29e3eP5MCFkcgzniSD0splbEhdjS2mGUtLsJiu2Bz4AaSgbNAGZGBpR6oF6p9MYyjmfvj08YvAzzH9CH3HV3eGq6qaqK6C6_U6HccxSQpt8dmjFpgr2BO0hWbh64CcEqoD7Rg6IW-jB18idMoivSAtwK3tGr9XeoHv1SFpaELKDF5R3W02p9qMBWHUd45RFGndnA-NY94qvWcH7FmtkIBE3c_gRPhGsEyWb3fsl3I1a4yAhu22u-XSC6Hn4lPNTEHYGofXHBd1iwJQ_q3zRY0AUeM7Ki93mQV5zpO-WKPtTHCcPZb0sGFDwYMnNVI8HAXPWMEfz53CmjYFX8Ul_1RyAs_Tsq_5BM5EBQetjQOnAnskCsxB1X1UQxod2ntlHibpdwc83LQ6DRU4x3GeDJugM5D-2eokYcITYThXJdbwogy9w8z8H23M_xcbbg04rVHL5VsWr3XGrDOEt8JAy6Ux45-veIvUgpLh8RpipODTOzUrl1iBb8IYhR5Dqu8kONxKFfrwrIJg6oqDd-ZbrwXPHHl8Szo-QMes8Tffb72O -->

|

||||

|

||||

{{< figure src="/images/blog/2022-09-09-kubernetes-1.25-kms-v2-improvements/kubernetes-1.25-encryption.svg" alt="Sequence diagram for KMSv2 Encrypt" class="diagram-large" >}}

|

||||

|

||||

#### Decrypt Request

|

||||

[](https://mermaid.live/edit#pako:eNrVVU2P0zAQ_SsjoyggdXcrEHuIVr3QHlBvgDhFQtN40lhN7GA7C1GU_47jdOuUhi4HkKCn1DPzPkbPcscyxYklLIo6IYVNoIttQRXFCcQ7NBQvYDz4jFrgriTjKh3EtRYV6vadKpUeel-8eX2f0duh_Vj6RN9tKOd57qHODpfLZdz3fRSl0tDXhmRGa4F7jVUqwf1q1FZkokZp4dDsCGthSD-SnilXpi6bvZCXJUdJWmLpWsZiFIHIoVQZlrDdbEFIQCmVRSuUNAtwfiU0Rsg9FII06qxox0ksHZzMdFtb4lME8xPI2A7nqm9Wq5PMBDh5HNCDc2PhYafvVtClzMkuSA-bSlkCKXsIjOvNdpWyBRyo_SK4L46fsFfWOtVovHVQOWzGqQ9kaieI_NzIkbKpUsfhSJ2w20GslmTJ3Ap1593dHOhwoeLk22H2_ZPVK9uRkGFWUOj0q3laxfxanFX4JmwRcMI4lYZmmZyr32CbBCLwBRDPqqlSls5pPXWYndU9lfPH_F4Z91avk5Pk4c8ZzDScibNsGy0nuRyDE4JZlyjkJJeBdSaXYYHwfv2Xw_fLPLh7eYzEzN38b27n9I49m-P1ZYLhpcGKYEcFPgqlBxlWcWxfTTLyfKzX00z9gzE6hUFytmAV6QoFdy9bNxynzD9iIyOnHJvS0aeyd61NzdHShgurNEtydGFaMGys-tjKjCVWN_TUdHydjl39D0CLbdk)

|

||||

|

||||

<!-- source - https://mermaid.ink/img/pako:eNrNVD1v2zAQ_SsEC0GLkxgt2kEIvEQeCo8tOgkoTuTJIiyRypFMIwj67yUlxx-w0CLoUg0a7t29e3eP5MCFkcgzniSD0splbEhdjS2mGUtLsJiu2Bz4AaSgbNAGZGBpR6oF6p9MYyjmfvj08YvAzzH9CH3HV3eGq6qaqK6C6_U6HccxSQpt8dmjFpgr2BO0hWbh64CcEqoD7Rg6IW-jB18idMoivSAtwK3tGr9XeoHv1SFpaELKDF5R3W02p9qMBWHUd45RFGndnA-NY94qvWcH7FmtkIBE3c_gRPhGsEyWb3fsl3I1a4yAhu22u-XSC6Hn4lPNTEHYGofXHBd1iwJQ_q3zRY0AUeM7Ki93mQV5zpO-WKPtTHCcPZb0sGFDwYMnNVI8HAXPWMEfz53CmjYFX8Ul_1RyAs_Tsq_5BM5EBQetjQOnAnskCsxB1X1UQxod2ntlHibpdwc83LQ6DRU4x3GeDJugM5D-2eokYcITYThXJdbwogy9w8z8H23M_xcbbg04rVHL5VsWr3XGrDOEt8JAy6Ux45-veIvUgpLh8RpipODTOzUrl1iBb8IYhR5Dqu8kONxKFfrwrIJg6oqDd-ZbrwXPHHl8Szo-QMes8Tffb72O)](https://mermaid.live/edit#pako:eNrNVD1v2zAQ_SsEC0GLkxgt2kEIvEQeCo8tOgkoTuTJIiyRypFMIwj67yUlxx-w0CLoUg0a7t29e3eP5MCFkcgzniSD0splbEhdjS2mGUtLsJiu2Bz4AaSgbNAGZGBpR6oF6p9MYyjmfvj08YvAzzH9CH3HV3eGq6qaqK6C6_U6HccxSQpt8dmjFpgr2BO0hWbh64CcEqoD7Rg6IW-jB18idMoivSAtwK3tGr9XeoHv1SFpaELKDF5R3W02p9qMBWHUd45RFGndnA-NY94qvWcH7FmtkIBE3c_gRPhGsEyWb3fsl3I1a4yAhu22u-XSC6Hn4lPNTEHYGofXHBd1iwJQ_q3zRY0AUeM7Ki93mQV5zpO-WKPtTHCcPZb0sGFDwYMnNVI8HAXPWMEfz53CmjYFX8Ul_1RyAs_Tsq_5BM5EBQetjQOnAnskCsxB1X1UQxod2ntlHibpdwc83LQ6DRU4x3GeDJugM5D-2eokYcITYThXJdbwogy9w8z8H23M_xcbbg04rVHL5VsWr3XGrDOEt8JAy6Ux45-veIvUgpLh8RpipODTOzUrl1iBb8IYhR5Dqu8kONxKFfrwrIJg6oqDd-ZbrwXPHHl8Szo-QMes8Tffb72O 
](https://mermaid.ink/img/pako:eNrVVU2P0zAQ_SsjoyggdXcrEHuIVr3QHlBvgDhFQtN40lhN7GA7C1GU_47jdOuUhi4HkKCn1DPzPkbPcscyxYklLIo6IYVNoIttQRXFCcQ7NBQvYDz4jFrgriTjKh3EtRYV6vadKpUeel-8eX2f0duh_Vj6RN9tKOd57qHODpfLZdz3fRSl0tDXhmRGa4F7jVUqwf1q1FZkokZp4dDsCGthSD-SnilXpi6bvZCXJUdJWmLpWsZiFIHIoVQZlrDdbEFIQCmVRSuUNAtwfiU0Rsg9FII06qxox0ksHZzMdFtb4lME8xPI2A7nqm9Wq5PMBDh5HNCDc2PhYafvVtClzMkuSA-bSlkCKXsIjOvNdpWyBRyo_SK4L46fsFfWOtVovHVQOWzGqQ9kaieI_NzIkbKpUsfhSJ2w20GslmTJ3Ap1593dHOhwoeLk22H2_ZPVK9uRkGFWUOj0q3laxfxanFX4JmwRcMI4lYZmmZyr32CbBCLwBRDPqqlSls5pPXWYndU9lfPH_F4Z91avk5Pk4c8ZzDScibNsGy0nuRyDE4JZlyjkJJeBdSaXYYHwfv2Xw_fLPLh7eYzEzN38b27n9I49m-P1ZYLhpcGKYEcFPgqlBxlWcWxfTTLyfKzX00z9gzE6hUFytmAV6QoFdy9bNxynzD9iIyOnHJvS0aeyd61NzdHShgurNEtydGFaMGys-tjKjCVWN_TUdHydjl39D0CLbdk)](https://mermaid.live/edit#pako:eNrVVU2P0zAQ_SsjoyggdXcrEHuIVr3QHlBvgDhFQtN40lhN7GA7C1GU_47jdOuUhi4HkKCn1DPzPkbPcscyxYklLIo6IYVNoIttQRXFCcQ7NBQvYDz4jFrgriTjKh3EtRYV6vadKpUeel-8eX2f0duh_Vj6RN9tKOd57qHODpfLZdz3fRSl0tDXhmRGa4F7jVUqwf1q1FZkokZp4dDsCGthSD-SnilXpi6bvZCXJUdJWmLpWsZiFIHIoVQZlrDdbEFIQCmVRSuUNAtwfiU0Rsg9FII06qxox0ksHZzMdFtb4lME8xPI2A7nqm9Wq5PMBDh5HNCDc2PhYafvVtClzMkuSA-bSlkCKXsIjOvNdpWyBRyo_SK4L46fsFfWOtVovHVQOWzGqQ9kaieI_NzIkbKpUsfhSJ2w20GslmTJ3Ap1593dHOhwoeLk22H2_ZPVK9uRkGFWUOj0q3laxfxanFX4JmwRcMI4lYZmmZyr32CbBCLwBRDPqqlSls5pPXWYndU9lfPH_F4Z91avk5Pk4c8ZzDScibNsGy0nuRyDE4JZlyjkJJeBdSaXYYHwfv2Xw_fLPLh7eYzEzN38b27n9I49m-P1ZYLhpcGKYEcFPgqlBxlWcWxfTTLyfKzX00z9gzE6hUFytmAV6QoFdy9bNxynzD9iIyOnHJvS0aeyd61NzdHShgurNEtydGFaMGys-tjKjCVWN_TUdHydjl39D0CLbdk -->

|

||||

{{< figure src="/images/blog/2022-09-09-kubernetes-1.25-kms-v2-improvements/kubernetes-1.25-decryption.svg" alt="Sequence diagram for KMSv2 Decrypt" class="diagram-large" >}}

|

||||

|

||||

## What’s next?

|

||||

For Kubernetes v1.26, we expect to ship another alpha version. As of right now, the alpha API will be ready to be used by KMS plugin authors. We hope to include a reference plugin implementation with the next release, and you'll be able to try out the feature at that time.

|

||||

@@ -47,4 +53,4 @@ If you are interested in getting involved in the development of this feature or

You are also welcome to join the bi-weekly [SIG Auth meetings](https://github.com/kubernetes/community/blob/master/sig-auth/README.md#meetings), held every other Wednesday.

|

||||

|

||||

## Acknowledgements

|

||||

This feature has been an effort driven by contributors from several different companies. We would like to extend a huge thank you to everyone that contributed their time and effort to help make this possible.


@@ -28,52 +28,49 @@ Kubernetes Project {{< glossary_tooltip text="Contributors" term_id="contributor

find it useful as an introduction to what extension points and patterns exist, and their

|

||||

trade-offs and limitations.

|

||||

|

||||

Customization approaches can be broadly divided into [configuration](#configuration), which only

|

||||

involves changing command line arguments, local configuration files, or API resources; and [extensions](#extensions),

|

||||

which involve running additional programs, additional network services, or both.

|

||||

This document is primarily about _extensions_.

|

||||

|

||||

<!-- body -->

|

||||

|

||||

## Overview

|

||||

|

||||

Customization approaches can be broadly divided into *configuration*, which only involves changing

|

||||

flags, local configuration files, or API resources; and *extensions*, which involve running

|

||||

additional programs or services. This document is primarily about extensions.

|

||||

|

||||

## Configuration

|

||||

|

||||

*Configuration files* and *flags* are documented in the Reference section of the online

|

||||

documentation, under each binary:

|

||||

*Configuration files* and *command arguments* are documented in the [Reference](/docs/reference/) section of the online

|

||||

documentation, with a page for each binary:

|

||||

|

||||

* [kubelet](/docs/reference/command-line-tools-reference/kubelet/)

|

||||

* [kube-proxy](/docs/reference/command-line-tools-reference/kube-proxy/)

|

||||

* [kube-apiserver](/docs/reference/command-line-tools-reference/kube-apiserver/)

|

||||

* [kube-controller-manager](/docs/reference/command-line-tools-reference/kube-controller-manager/)

|

||||

* [kube-scheduler](/docs/reference/command-line-tools-reference/kube-scheduler/).

|

||||

* [`kube-apiserver`](/docs/reference/command-line-tools-reference/kube-apiserver/)

|

||||

* [`kube-controller-manager`](/docs/reference/command-line-tools-reference/kube-controller-manager/)

|

||||

* [`kube-scheduler`](/docs/reference/command-line-tools-reference/kube-scheduler/)

|

||||

* [`kubelet`](/docs/reference/command-line-tools-reference/kubelet/)

|

||||

* [`kube-proxy`](/docs/reference/command-line-tools-reference/kube-proxy/)

|

||||

|

||||

Flags and configuration files may not always be changeable in a hosted Kubernetes service or a

|

||||

Command arguments and configuration files may not always be changeable in a hosted Kubernetes service or a

|

||||

distribution with managed installation. When they are changeable, they are usually only changeable

|

||||

by the cluster administrator. Also, they are subject to change in future Kubernetes versions, and

|

||||

by the cluster operator. Also, they are subject to change in future Kubernetes versions, and

|

||||

setting them may require restarting processes. For those reasons, they should be used only when

|

||||

there are no other options.

|

||||

|

||||

*Built-in Policy APIs*, such as [ResourceQuota](/docs/concepts/policy/resource-quotas/),

|

||||

[PodSecurityPolicies](/docs/concepts/security/pod-security-policy/),

|

||||

Built-in *policy APIs*, such as [ResourceQuota](/docs/concepts/policy/resource-quotas/),

|

||||

[NetworkPolicy](/docs/concepts/services-networking/network-policies/) and Role-based Access Control

|

||||

([RBAC](/docs/reference/access-authn-authz/rbac/)), are built-in Kubernetes APIs.

|

||||

APIs are typically used with hosted Kubernetes services and with managed Kubernetes installations.

|

||||

They are declarative and use the same conventions as other Kubernetes resources like pods,

|

||||

so new cluster configuration can be repeatable and be managed the same way as applications.

|

||||

And, where they are stable, they enjoy a

|

||||

([RBAC](/docs/reference/access-authn-authz/rbac/)), are built-in Kubernetes APIs that provide declaratively configured policy settings.

|

||||

APIs are typically usable even with hosted Kubernetes services and with managed Kubernetes installations.

|

||||

The built-in policy APIs follow the same conventions as other Kubernetes resources such as Pods.

|

||||

When you use a policy API that is [stable](/docs/reference/using-api/#api-versioning), you benefit from a

|

||||

[defined support policy](/docs/reference/using-api/deprecation-policy/) like other Kubernetes APIs.

|

||||

For these reasons, they are preferred over *configuration files* and *flags* where suitable.

|

||||

For these reasons, policy APIs are recommended over *configuration files* and *command arguments* where suitable.

|

||||

|

||||

## Extensions

|

||||

|

||||

Extensions are software components that extend and deeply integrate with Kubernetes.

|

||||

They adapt it to support new types and new kinds of hardware.

|

||||

|

||||

Many cluster administrators use a hosted or distribution instance of Kubernetes.

|

||||

These clusters come with extensions pre-installed. As a result, most Kubernetes


|

||||

users will not need to install extensions and even fewer users will need to author new ones.

|

||||

|

||||

## Extension Patterns

|

||||

### Extension patterns

|

||||

|

||||

Kubernetes is designed to be automated by writing client programs. Any

|

||||

program that reads and/or writes to the Kubernetes API can provide useful

|

||||

@@ -83,127 +80,163 @@ Automation generally works with any Kubernetes cluster, including hosted

clusters and managed installations.

|

||||

|

||||

There is a specific pattern for writing client programs that work well with

|

||||

Kubernetes called the *Controller* pattern. Controllers typically read an

|

||||

object's `.spec`, possibly do things, and then update the object's `.status`.

|

||||

Kubernetes called the {{< glossary_tooltip term_id="controller" text="controller" >}}

|

||||

pattern. Controllers typically read an object's `.spec`, possibly do things, and then

|

||||

update the object's `.status`.
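The read-`.spec`, act, write-`.status` cycle described above can be sketched in a few lines. This is only an illustration under stated assumptions: the object is a plain dictionary rather than something fetched from the Kubernetes API, and the `replicas` and `readyReplicas` fields, as well as the `reconcile` and `run_until_settled` names, are hypothetical.

```python
from copy import deepcopy


def reconcile(obj: dict) -> dict:
    """Return a copy of obj whose .status is one step closer to its .spec."""
    desired = obj["spec"]["replicas"]          # hypothetical spec field
    observed = obj.get("status", {}).get("readyReplicas", 0)

    # "Possibly do things": a real controller would create or delete
    # something here; this sketch only moves a counter by one step.
    if observed < desired:
        observed += 1
    elif observed > desired:
        observed -= 1

    updated = deepcopy(obj)                    # never mutate the input object
    updated["status"] = {"readyReplicas": observed}
    return updated


def run_until_settled(obj: dict, max_rounds: int = 10) -> dict:
    """Call reconcile repeatedly until the object stops changing."""
    for _ in range(max_rounds):
        nxt = reconcile(obj)
        if nxt == obj:
            return obj
        obj = nxt
    return obj


widget = {"spec": {"replicas": 3}}
settled = run_until_settled(widget)
print(settled["status"])
```

A real controller would typically watch the API server for changes instead of looping over a local copy, and would write `.status` back through the API.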

|

||||

|

||||

A controller is a client of Kubernetes. When Kubernetes is the client and calls out to a remote

|

||||

service, it is called a *Webhook*. The remote service is called a *Webhook Backend*. Like

|

||||

Controllers, Webhooks do add a point of failure.

|

||||

A controller is a client of the Kubernetes API. When Kubernetes is the client and calls

|

||||

out to a remote service, Kubernetes calls this a *webhook*. The remote service is called

|

||||

a *webhook backend*. As with custom controllers, webhooks do add a point of failure.

|

||||

|

||||

{{< note >}}

|

||||

Outside of Kubernetes, the term “webhook” typically refers to a mechanism for asynchronous

|

||||

notifications, where the webhook call serves as a one-way notification to another system or

|

||||

component. In the Kubernetes ecosystem, even synchronous HTTP callouts are often

|

||||

described as “webhooks”.

|

||||

{{< /note >}}

|

||||

|

||||

In the webhook model, Kubernetes makes a network request to a remote service.

|

||||

In the *Binary Plugin* model, Kubernetes executes a binary (program).

|

||||

Binary plugins are used by the kubelet (e.g.

|

||||

[Flex Volume Plugins](/docs/concepts/storage/volumes/#flexvolume)

|

||||

and [Network Plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/))

|

||||

and by kubectl.

|

||||

With the alternative *binary plugin* model, Kubernetes executes a binary (program).

|

||||

Binary plugins are used by the kubelet (for example, [CSI storage plugins](https://kubernetes-csi.github.io/docs/)

|

||||

and [CNI network plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/)),

|

||||

and by kubectl (see [Extend kubectl with plugins](/docs/tasks/extend-kubectl/kubectl-plugins/)).

|

||||

|

||||

Below is a diagram showing how the extension points interact with the

|

||||

Kubernetes control plane.

|

||||

### Extension points

|

||||

|

||||

<!-- image source drawing https://docs.google.com/drawings/d/1muJ7Oxuj_7Gtv7HV9-2zJbOnkQJnjxq-v1ym_kZfB-4/edit?ts=5a01e054 -->

|

||||

|

||||

This diagram shows the extension points in a Kubernetes cluster and the

|

||||

clients that access it.

|

||||

|

||||

## Extension Points

|

||||

<!-- image source: https://docs.google.com/drawings/d/1k2YdJgNTtNfW7_A8moIIkij-DmVgEhNrn3y2OODwqQQ/view -->

|

||||

|

||||

This diagram shows the extension points in a Kubernetes system.

|

||||

{{< figure src="/docs/concepts/extend-kubernetes/extension-points.png"

|

||||

alt="Symbolic representation of seven numbered extension points for Kubernetes"

|

||||

class="diagram-large" caption="Kubernetes extension points" >}}

|

||||

|

||||

<!-- image source diagrams: https://docs.google.com/drawings/d/1k2YdJgNTtNfW7_A8moIIkij-DmVgEhNrn3y2OODwqQQ/view -->

|

||||

|

||||

#### Key to the figure

|

||||

|

||||

1. Users often interact with the Kubernetes API using `kubectl`.

|

||||

[Kubectl plugins](/docs/tasks/extend-kubectl/kubectl-plugins/) extend the kubectl binary.

|

||||

They only affect the individual user's local environment, and so cannot enforce site-wide policies.

|

||||

1. Users often interact with the Kubernetes API using `kubectl`. [Plugins](#client-extensions)

|

||||

customize the behavior of clients. There are generic extensions that can apply to different clients,

|

||||

as well as specific ways to extend `kubectl`.

|

||||

|

||||

1. The API server handles all requests. Several types of extension points in the API server allow

|

||||

authenticating requests, or blocking them based on their content, editing content, and handling

|

||||

deletion. These are described in the [API Access Extensions](#api-access-extensions) section.

|

||||

|

||||

1. The API server serves various kinds of *resources*. *Built-in resource kinds*, like `pods`, are

|

||||

defined by the Kubernetes project and can't be changed. You can also add resources that you

|

||||

define, or that other projects have defined, called *Custom Resources*, as explained in the

|

||||

[Custom Resources](#user-defined-types) section. Custom Resources are often used with API access

|

||||

extensions.

|

||||

1. The API server serves various kinds of *resources*. *Built-in resource kinds*, such as

|

||||

`pods`, are defined by the Kubernetes project and can't be changed.

|

||||

Read [API extensions](#api-extensions) to learn about extending the Kubernetes API.

|

||||

|

||||

1. The Kubernetes scheduler decides which nodes to place pods on. There are several ways to extend

|

||||

scheduling. These are described in the [Scheduler Extensions](#scheduler-extensions) section.

|

||||

1. The Kubernetes scheduler [decides](/docs/concepts/scheduling-eviction/assign-pod-node/)

|

||||

which nodes to place pods on. There are several ways to extend scheduling, which are

|

||||

described in the [scheduler extensions](#scheduler-extensions) section.

|

||||

|

||||

1. Much of the behavior of Kubernetes is implemented by programs called Controllers which are

|

||||

clients of the API server. Controllers are often used in conjunction with Custom Resources.

|

||||

1. Much of the behavior of Kubernetes is implemented by programs called

|

||||

{{< glossary_tooltip term_id="controller" text="controllers" >}}, that are

|

||||

clients of the API server. Controllers are often used in conjunction with custom resources.

|

||||

Read [combining new APIs with automation](#combining-new-apis-with-automation) and

|

||||

[Changing built-in resources](#changing-built-in-resources) to learn more.

|

||||

|

||||

1. The kubelet runs on servers, and helps pods appear like virtual servers with their own IPs on

|

||||

1. The kubelet runs on servers (nodes), and helps pods appear like virtual servers with their own IPs on

|

||||

the cluster network. [Network Plugins](#network-plugins) allow for different implementations of

|

||||

pod networking.

|

||||

|

||||

1. The kubelet also mounts and unmounts volumes for containers. New types of storage can be

|

||||

supported via [Storage Plugins](#storage-plugins).

|

||||

1. You can use [Device Plugins](#device-plugins) to integrate custom hardware or other special

|

||||

node-local facilities, and make these available to Pods running in your cluster. The kubelet

|

||||

includes support for working with device plugins.

|

||||

|

||||

The kubelet also mounts and unmounts
{{< glossary_tooltip text="volumes" term_id="volume" >}} for pods and their containers.
You can use [Storage Plugins](#storage-plugins) to add support for new kinds
of storage and other volume types.

|

||||

|

||||

|

||||

#### Extension point choice flowchart {#extension-flowchart}

|

||||

|

||||

If you are unsure where to start, this flowchart can help. Note that some solutions may involve

|

||||

several types of extensions.

|

||||

|

||||

<!-- image source drawing: https://docs.google.com/drawings/d/1sdviU6lDz4BpnzJNHfNpQrqI9F19QZ07KnhnxVrp2yg/edit -->

|

||||

|

||||

<!-- image source for flowchart: https://docs.google.com/drawings/d/1sdviU6lDz4BpnzJNHfNpQrqI9F19QZ07KnhnxVrp2yg/edit -->

|

||||

|

||||

## API Extensions

|

||||

{{< figure src="/docs/concepts/extend-kubernetes/flowchart.png"

|

||||

alt="Flowchart with questions about use cases and guidance for implementers. Green circles indicate yes; red circles indicate no."

|

||||

class="diagram-large" caption="Flowchart guide to select an extension approach" >}}

|

||||

|

||||

### User-Defined Types

|

||||

---

|

||||

|

||||

Consider adding a Custom Resource to Kubernetes if you want to define new controllers, application

|

||||

## Client extensions

|

||||

|

||||

Plugins for kubectl are separate binaries that add or replace the behavior of specific subcommands.

|

||||

The `kubectl` tool can also integrate with [credential plugins](/docs/reference/access-authn-authz/authentication/#client-go-credential-plugins).
These extensions only affect an individual user's local environment, and so cannot enforce site-wide policies.

|

||||

|

||||

If you want to extend the `kubectl` tool, read [Extend kubectl with plugins](/docs/tasks/extend-kubectl/kubectl-plugins/).
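As a rough illustration of what "separate binaries" means here: kubectl treats executables on your `PATH` whose file names start with `kubectl-` as plugins. The sketch below mimics that discovery step using a temporary directory. The `find_kubectl_plugins` and `demo` helpers and the `kubectl-hello` plugin are hypothetical, and the code is not how kubectl itself is implemented.

```python
import os
import stat
import tempfile


def find_kubectl_plugins(path_dirs):
    """Return sorted names of executable files called kubectl-* in path_dirs."""
    found = set()
    for directory in path_dirs:
        if not os.path.isdir(directory):
            continue
        for name in os.listdir(directory):
            full = os.path.join(directory, name)
            if (name.startswith("kubectl-")
                    and os.path.isfile(full)
                    and os.access(full, os.X_OK)):
                found.add(name)
    return sorted(found)


def demo():
    """Create one executable plugin and one non-executable file, then scan."""
    with tempfile.TemporaryDirectory() as d:
        plugin = os.path.join(d, "kubectl-hello")   # hypothetical plugin
        with open(plugin, "w") as f:
            f.write("#!/bin/sh\necho hello from a plugin\n")
        os.chmod(plugin, stat.S_IRWXU)              # owner may execute

        notes = os.path.join(d, "kubectl-notes.txt")
        with open(notes, "w") as f:
            f.write("not a plugin\n")
        os.chmod(notes, stat.S_IRUSR | stat.S_IWUSR)  # no execute bit

        return find_kubectl_plugins([d])


print(demo())
```

Because the plugin is just a file in one user's environment, nothing here can constrain what other users of the cluster do, which is why client extensions cannot enforce site-wide policy.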

|

||||

|

||||

## API extensions

|

||||

|

||||

### Custom resource definitions

|

||||

|

||||

Consider adding a _Custom Resource_ to Kubernetes if you want to define new controllers, application

|

||||

configuration objects or other declarative APIs, and to manage them using Kubernetes tools, such

|

||||

as `kubectl`.

|

||||

|

||||

Do not use a Custom Resource as data storage for application, user, or monitoring data.

|

||||

|

||||

For more about Custom Resources, see the

|

||||

[Custom Resources concept guide](/docs/concepts/extend-kubernetes/api-extension/custom-resources/).

|

||||

[Custom Resources](/docs/concepts/extend-kubernetes/api-extension/custom-resources/) concept guide.

|

||||

|

||||

### API aggregation layer

|

||||

|

||||

### Combining New APIs with Automation

|

||||

You can use Kubernetes' [API Aggregation Layer](/docs/concepts/extend-kubernetes/api-extension/apiserver-aggregation/)

|

||||

to integrate the Kubernetes API with additional services such as for [metrics](/docs/tasks/debug/debug-cluster/resource-metrics-pipeline/).

|

||||

|

||||

The combination of a custom resource API and a control loop is called the

|

||||

[Operator pattern](/docs/concepts/extend-kubernetes/operator/). The Operator pattern is used to manage

|

||||

specific, usually stateful, applications. These custom APIs and control loops can also be used to

|

||||

control other resources, such as storage or policies.

|

||||

### Combining new APIs with automation

|

||||

|

||||

### Changing Built-in Resources

|

||||

A combination of a custom resource API and a control loop is called the

|

||||

{{< glossary_tooltip term_id="controller" text="controller" >}} pattern. If your controller takes

|

||||

the place of a human operator deploying infrastructure based on a desired state, then the controller

|

||||

may also be following the {{< glossary_tooltip text="operator pattern" term_id="operator-pattern" >}}.

|

||||

The Operator pattern is used to manage specific applications; usually, these are applications that

|

||||

maintain state and require care in how they are managed.

|

||||

|

||||

You can also make your own custom APIs and control loops that manage other resources, such as storage,

|

||||

or to define policies (such as an access control restriction).

|

||||

|

||||

### Changing built-in resources

|

||||

|

||||

When you extend the Kubernetes API by adding custom resources, the added resources always fall

|

||||

into new API groups. You cannot replace or change existing API groups.

|

||||

Adding an API does not directly let you affect the behavior of existing APIs (e.g. Pods), but API

|

||||

Access Extensions do.

|

||||

Adding an API does not directly let you affect the behavior of existing APIs (such as Pods), whereas

|

||||

_API Access Extensions_ do.

|

||||

|

||||

## API access extensions

|

||||

|

||||

### API Access Extensions

|

||||

|

||||

When a request reaches the Kubernetes API Server, it is first Authenticated, then Authorized, then

|

||||

subject to various types of Admission Control. See

|

||||

When a request reaches the Kubernetes API Server, it is first _authenticated_, then _authorized_,

|

||||

and is then subject to various types of _admission control_ (some requests are in fact not

|

||||

authenticated, and get special treatment). See

|

||||

[Controlling Access to the Kubernetes API](/docs/concepts/security/controlling-access/)

|

||||

for more on this flow.

|

||||

|

||||

Each of these steps offers extension points.

|

||||

|

||||

Kubernetes has several built-in authentication methods that it supports. It can also sit behind an

|

||||

authenticating proxy, and it can send a token from an Authorization header to a remote service for

|

||||

verification (a webhook). All of these methods are covered in the

|

||||

[Authentication documentation](/docs/reference/access-authn-authz/authentication/).

|

||||

Each of the steps in the Kubernetes authentication / authorization flow offers extension points.

|

||||

|

||||

### Authentication

|

||||

|

||||

[Authentication](/docs/reference/access-authn-authz/authentication/) maps headers or certificates

|

||||

in all requests to a username for the client making the request.

|

||||

|

||||

Kubernetes provides several built-in authentication methods, and an

|

||||

[Authentication webhook](/docs/reference/access-authn-authz/authentication/#webhook-token-authentication)

|

||||

method if those don't meet your needs.

|

||||

Kubernetes has several built-in authentication methods that it supports. It can also sit behind an

|

||||

authenticating proxy, and it can send a token from an `Authorization:` header to a remote service for

|

||||

verification (an [authentication webhook](/docs/reference/access-authn-authz/authentication/#webhook-token-authentication))

|

||||

if those don't meet your needs.
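To make the token verification step more concrete, here is a minimal sketch of the decision a webhook backend makes. It assumes the `TokenReview` request and response shapes from the `authentication.k8s.io/v1` API; the token table, the user details, and the `review_token` function are hypothetical, and a real backend would sit behind an HTTPS endpoint and consult an identity provider.

```python
# Hypothetical token table, for illustration only.
KNOWN_TOKENS = {
    "s3cr3t-token": {"username": "jane", "uid": "42", "groups": ["developers"]},
}


def review_token(token_review: dict) -> dict:
    """Answer a TokenReview: is the bearer token known, and whose is it?"""
    token = token_review.get("spec", {}).get("token", "")
    user = KNOWN_TOKENS.get(token)

    status = {"authenticated": user is not None}
    if user is not None:
        status["user"] = user   # username, uid, and groups for the API server

    return {
        "apiVersion": "authentication.k8s.io/v1",
        "kind": "TokenReview",
        "status": status,
    }


request = {
    "apiVersion": "authentication.k8s.io/v1",
    "kind": "TokenReview",
    "spec": {"token": "s3cr3t-token"},
}
print(review_token(request)["status"])
```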

|

||||

|

||||

### Authorization

|

||||

|

||||

[Authorization](/docs/reference/access-authn-authz/authorization/) determines whether specific

|

||||

users can read, write, and do other operations on API resources. It works at the level of whole

|

||||

resources -- it doesn't discriminate based on arbitrary object fields. If the built-in

|

||||

authorization options don't meet your needs, [Authorization webhook](/docs/reference/access-authn-authz/webhook/)

|

||||

allows calling out to user-provided code to make an authorization decision.

|

||||

resources -- it doesn't discriminate based on arbitrary object fields.

|

||||

|

||||

### Dynamic Admission Control

|

||||

If the built-in authorization options don't meet your needs, an

|

||||

[authorization webhook](/docs/reference/access-authn-authz/webhook/)

|

||||

allows calling out to custom code that makes an authorization decision.
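The sketch below shows the kind of decision such custom code makes. It assumes the `SubjectAccessReview` shape from the `authorization.k8s.io/v1` API; the rule set and the `review_access` function are hypothetical. Note that the request carries only the user, verb, and resource, not the object's fields, which matches the whole-resource granularity described above.

```python
# Hypothetical rule set: (user, verb, resource) triples that are permitted.
ALLOWED = {
    ("jane", "get", "pods"),
    ("jane", "list", "pods"),
}


def review_access(subject_access_review: dict) -> dict:
    """Answer a SubjectAccessReview with an allow or deny decision."""
    spec = subject_access_review.get("spec", {})
    attrs = spec.get("resourceAttributes", {})
    key = (spec.get("user"), attrs.get("verb"), attrs.get("resource"))

    allowed = key in ALLOWED
    status = {"allowed": allowed}
    if not allowed:
        status["reason"] = "no matching rule for this user, verb, and resource"

    return {
        "apiVersion": "authorization.k8s.io/v1",
        "kind": "SubjectAccessReview",
        "status": status,
    }


request = {
    "apiVersion": "authorization.k8s.io/v1",
    "kind": "SubjectAccessReview",
    "spec": {
        "user": "jane",
        "resourceAttributes": {"namespace": "default", "verb": "get", "resource": "pods"},
    },
}
print(review_access(request)["status"])
```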

|

||||

|

||||

### Dynamic admission control

|

||||

|

||||

After a request is authorized, if it is a write operation, it also goes through

|

||||

[Admission Control](/docs/reference/access-authn-authz/admission-controllers/) steps.

|

||||

@@ -213,33 +246,44 @@ In addition to the built-in steps, there are several extensions:

restricts what images can be run in containers.

|

||||

* To make arbitrary admission control decisions, a general

|

||||

[Admission webhook](/docs/reference/access-authn-authz/extensible-admission-controllers/#admission-webhooks)

|

||||

can be used. Admission Webhooks can reject creations or updates.

|

||||

can be used. Admission webhooks can reject creations or updates.

|

||||

Some admission webhooks modify the incoming request data before it is handled further by Kubernetes.
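As an illustration of how an admission webhook can reject a creation or update, the sketch below builds the response for a validating webhook. It assumes the `AdmissionReview` shape from the `admission.k8s.io/v1` API. The policy (every object must carry a `team` label) and the `review_admission` function are hypothetical, and a real webhook would serve this over HTTPS.

```python
def review_admission(admission_review: dict) -> dict:
    """Allow the request only if the submitted object has a 'team' label."""
    request = admission_review["request"]
    labels = request["object"].get("metadata", {}).get("labels", {})

    # The response must echo the uid of the request it answers.
    response = {"uid": request["uid"], "allowed": True}
    if "team" not in labels:
        response["allowed"] = False
        response["status"] = {
            "code": 403,
            "message": "objects must have a 'team' label (hypothetical policy)",
        }

    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


incoming = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "abc-123",
        "object": {"kind": "Pod", "metadata": {"name": "demo", "labels": {}}},
    },
}
print(review_admission(incoming)["response"])
```

A mutating webhook would return the same envelope with a patch describing the change, instead of only an allow or deny decision.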

|

||||

|

||||

## Infrastructure Extensions

|

||||

## Infrastructure extensions

|

||||

|

||||

### Storage Plugins

|

||||

### Device plugins

|

||||

|

||||

[Flex Volumes](https://git.k8s.io/design-proposals-archive/storage/flexvolume-deployment.md)

|

||||

allow users to mount volume types without built-in support by having the kubelet call a binary

|

||||

plugin to mount the volume.

|

||||

|

||||

FlexVolume is deprecated since Kubernetes v1.23. The out-of-tree CSI driver is the recommended way

|

||||

to write volume drivers in Kubernetes. See

|

||||

[Kubernetes Volume Plugin FAQ for Storage Vendors](https://github.com/kubernetes/community/blob/master/sig-storage/volume-plugin-faq.md#kubernetes-volume-plugin-faq-for-storage-vendors)

|

||||

for more information.

|

||||

|

||||

### Device Plugins

|

||||

|

||||

Device plugins allow a node to discover new Node resources (in addition to the

|

||||

_Device plugins_ allow a node to discover new Node resources (in addition to the

|

||||

built-in ones such as `cpu` and `memory`) via a

|

||||

[Device Plugin](/docs/concepts/extend-kubernetes/compute-storage-net/device-plugins/).

|

||||

|

||||

### Network Plugins

|

||||

### Storage plugins

|

||||

|

||||

Different networking fabrics can be supported via node-level

|

||||

[Network Plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/).

|

||||

{{< glossary_tooltip text="Container Storage Interface" term_id="csi" >}} (CSI) plugins provide
a way to extend Kubernetes with support for new kinds of volumes. The volumes can be backed by
durable external storage, or provide ephemeral storage, or they might offer a read-only interface
to information using a filesystem paradigm.

|

||||

|

||||

### Scheduler Extensions

|

||||

Kubernetes also includes support for [FlexVolume](/docs/concepts/storage/volumes/#flexvolume) plugins,

|

||||

which are deprecated since Kubernetes v1.23 (in favor of CSI).

|

||||

|

||||

FlexVolume plugins allow users to mount volume types that aren't natively supported by Kubernetes. When

|

||||

you run a Pod that relies on FlexVolume storage, the kubelet calls a binary plugin to mount the volume.

|

||||

The archived [FlexVolume](https://git.k8s.io/design-proposals-archive/storage/flexvolume-deployment.md)

|

||||

design proposal has more detail on this approach.

|

||||

|

||||

The [Kubernetes Volume Plugin FAQ for Storage Vendors](https://github.com/kubernetes/community/blob/master/sig-storage/volume-plugin-faq.md#kubernetes-volume-plugin-faq-for-storage-vendors)

|

||||

includes general information on storage plugins.

|

||||

|

||||

### Network plugins

|

||||

|

||||

Your Kubernetes cluster needs a _network plugin_ in order to have a working Pod network

|

||||

and to support other aspects of the Kubernetes network model.

|

||||

|

||||

[Network Plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/)

|

||||

allow Kubernetes to work with different networking topologies and technologies.

|

||||

|

||||

## Scheduling extensions

|

||||

|

||||

The scheduler is a special type of controller that watches pods, and assigns

|

||||

pods to nodes. The default scheduler can be replaced entirely, while

|

||||

|

|

@ -250,19 +294,30 @@ can run at the same time.

|

|||

This is a significant undertaking, and almost all Kubernetes users find they

|

||||

do not need to modify the scheduler.

|

||||

|

||||

The scheduler also supports a

|

||||

You can control which [scheduling plugins](/docs/reference/scheduling/config/#scheduling-plugins)

|

||||

are active, or associate sets of plugins with different named [scheduler profiles](/docs/reference/scheduling/config/#multiple-profiles).

|

||||

You can also write your own plugin that integrates with one or more of the kube-scheduler's

|

||||

[extension points](/docs/concepts/scheduling-eviction/scheduling-framework/#extension-points).

|

||||

|

||||

Finally, the built in `kube-scheduler` component supports a

|

||||

[webhook](https://git.k8s.io/design-proposals-archive/scheduling/scheduler_extender.md)

|

||||

that permits a webhook backend (scheduler extension) to filter and prioritize

|

||||

the nodes chosen for a pod.

|

||||

that permits a remote HTTP backend (scheduler extension) to filter and / or prioritize

|

||||

the nodes that the kube-scheduler chooses for a pod.

|

||||

|

||||

{{< note >}}

|

||||

You can only affect node filtering

|

||||

and node prioritization with a scheduler extender webhook; other extension points are

|

||||

not available through the webhook integration.

|

||||

{{< /note >}}
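
As a rough illustration of the two decisions an extender can influence, the sketch below filters candidate nodes and then scores the survivors in plain Python. The function names, the label-based rule, and the dictionary shapes are all hypothetical; this is not the extender wire format or a webhook server, only the filter-then-prioritize logic.

```python
from typing import Dict, List

# Hypothetical node representation: node name -> labels.
Nodes = Dict[str, Dict[str, str]]


def filter_nodes(nodes: Nodes, required_label: str) -> List[str]:
    """Keep only nodes that carry the required label (an illustrative rule)."""
    return [name for name, labels in nodes.items() if required_label in labels]


def prioritize_nodes(names: List[str], preferred: str) -> Dict[str, int]:
    """Give the preferred node a higher score; every other node scores 0."""
    return {name: (10 if name == preferred else 0) for name in names}


# Example data; the node names and label key are made up for illustration.
nodes = {
    "node-a": {"example.com/gpu": "true"},
    "node-b": {},
    "node-c": {"example.com/gpu": "true"},
}
candidates = filter_nodes(nodes, "example.com/gpu")
scores = prioritize_nodes(candidates, preferred="node-c")
print(candidates)
print(scores)
```

A real extender would expose this logic over HTTP; anything beyond filtering and prioritization would need a scheduling plugin instead.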

|

||||

|

||||

## {{% heading "whatsnext" %}}

|

||||

|

||||

|

||||

* Learn more about [Custom Resources](/docs/concepts/extend-kubernetes/api-extension/custom-resources/)

|

||||

* Learn about [Dynamic admission control](/docs/reference/access-authn-authz/extensible-admission-controllers/)

|

||||

* Learn more about Infrastructure extensions

|

||||

* [Network Plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/)

|

||||

* Learn more about infrastructure extensions

|

||||

* [Device Plugins](/docs/concepts/extend-kubernetes/compute-storage-net/device-plugins/)

|

||||

* [Network Plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/)

|

||||

* CSI [storage plugins](https://kubernetes-csi.github.io/docs/)

|

||||

* Learn about [kubectl plugins](/docs/tasks/extend-kubectl/kubectl-plugins/)

|

||||

* Learn more about [Custom Resources](/docs/concepts/extend-kubernetes/api-extension/custom-resources/)

|

||||

* Learn more about [Extension API Servers](/docs/concepts/extend-kubernetes/api-extension/apiserver-aggregation/)

|

||||

* Learn about [Dynamic admission control](/docs/reference/access-authn-authz/extensible-admission-controllers/)

|

||||

* Learn about the [Operator pattern](/docs/concepts/extend-kubernetes/operator/)

|

||||

|

||||

|

|

|

|||

|

|

@ -126,6 +126,17 @@ CRDs allow users to create new types of resources without adding another API ser

|

|||

|

||||

Regardless of how they are installed, the new resources are referred to as Custom Resources to distinguish them from built-in Kubernetes resources (like pods).

|

||||

|

||||

{{< note >}}

|

||||

Avoid using a Custom Resource as data storage for application, end user, or monitoring data:

|

||||

architecture designs that store application data within the Kubernetes API typically represent

|

||||

a design that is too closely coupled.

|

||||

|

||||

Architecturally, [cloud native](https://www.cncf.io/about/faq/#what-is-cloud-native) application architectures

|

||||

favor loose coupling between components. If part of your workload requires a backing service for

|

||||

its routine operation, run that backing service as a component or consume it as an external service.

|

||||

This way, your workload does not rely on the Kubernetes API for its normal operation.

|

||||

{{< /note >}}

|

||||

|

||||

## CustomResourceDefinitions

|

||||

|

||||

The [CustomResourceDefinition](/docs/tasks/extend-kubernetes/custom-resources/custom-resource-definitions/)

|

||||

|

|

|

|||

|

|

@ -1,4 +1,44 @@

|

|||

---

|

||||

title: Compute, Storage, and Networking Extensions

|

||||

weight: 30

|

||||

no_list: true

|

||||

---

|

||||

|

||||

This section covers extensions to your cluster that do not come as part of Kubernetes itself.

|

||||

You can use these extensions to enhance the nodes in your cluster, or to provide the network

|

||||

fabric that links Pods together.

|

||||

|

||||

* [CSI](/docs/concepts/storage/volumes/#csi) and [FlexVolume](/docs/concepts/storage/volumes/#flexvolume) storage plugins

|

||||

|

||||

{{< glossary_tooltip text="Container Storage Interface" term_id="csi" >}} (CSI) plugins

|

||||

provide a way to extend Kubernetes with support for new kinds of volumes. The volumes can

|

||||

be backed by durable external storage, or provide ephemeral storage, or they might offer a

|

||||

read-only interface to information using a filesystem paradigm.

|

||||

|

||||

Kubernetes also includes support for [FlexVolume](/docs/concepts/storage/volumes/#flexvolume)

|

||||

plugins, which are deprecated since Kubernetes v1.23 (in favour of CSI).

|

||||

|

||||

FlexVolume plugins allow users to mount volume types that aren't natively

|

||||

supported by Kubernetes. When you run a Pod that relies on FlexVolume

|

||||

storage, the kubelet calls a binary plugin to mount the volume. The archived

|

||||

[FlexVolume](https://git.k8s.io/design-proposals-archive/storage/flexvolume-deployment.md)

|

||||

design proposal has more detail on this approach.

|

||||

|

||||

The [Kubernetes Volume Plugin FAQ for Storage Vendors](https://github.com/kubernetes/community/blob/master/sig-storage/volume-plugin-faq.md#kubernetes-volume-plugin-faq-for-storage-vendors)

|

||||

includes general information on storage plugins.

|

||||

|

||||

* [Device plugins](/docs/concepts/extend-kubernetes/compute-storage-net/device-plugins/)

|

||||

|

||||

Device plugins allow a node to discover new Node facilities (in addition to the

|

||||

built-in node resources such as `cpu` and `memory`), and provide these custom node-local

|

||||

facilities to Pods that request them.

|

||||

|

||||

* [Network plugins](/docs/concepts/extend-kubernetes/compute-storage-net/network-plugins/)

|

||||

|

||||

A network plugin allows Kubernetes to work with different networking topologies and technologies.

|

||||

Your Kubernetes cluster needs a _network plugin_ in order to have a working Pod network

|

||||

and to support other aspects of the Kubernetes network model.

|

||||

|

||||

Kubernetes {{< skew currentVersion >}} is compatible with {{< glossary_tooltip text="CNI" term_id="cni" >}}

|

||||

network plugins.

|

||||

|

||||

|

|

|

|||

|

|

@ -212,7 +212,7 @@ work between Windows and Linux:

|

|||

* `securityContext.capabilities` -

|

||||

POSIX capabilities are not implemented on Windows

|

||||

* `securityContext.privileged` -

|

||||

Windows doesn't support privileged containers

|

||||

Windows doesn't support privileged containers; use [HostProcess Containers](/docs/tasks/configure-pod-container/create-hostprocess-pod/) instead

|

||||

* `securityContext.procMount` -

|

||||

Windows doesn't have a `/proc` filesystem

|

||||

* `securityContext.readOnlyRootFilesystem` -

|

||||

|

|

|

|||

|

|

@ -15,7 +15,7 @@ This page provides an overview of best practices when it comes to enforcing

|

|||

|

||||

## Using the built-in Pod Security Admission Controller

|

||||

|

||||

{{< feature-state for_k8s_version="v1.23" state="beta" >}}

|

||||

{{< feature-state for_k8s_version="v1.25" state="stable" >}}

|

||||

|

||||

The [Pod Security Admission Controller](/docs/reference/access-authn-authz/admission-controllers/#podsecurity)

|

||||

intends to replace the deprecated PodSecurityPolicies.

|

||||

|

|

|

|||

|

|

@ -1,5 +1,5 @@

|

|||

---

|

||||

title: Installing Kubernetes with kops

|

||||

title: Installing Kubernetes with kOps

|

||||

content_type: task

|

||||

weight: 20

|

||||

---

|

||||

|

|

@ -7,30 +7,25 @@ weight: 20

|

|||

<!-- overview -->

|

||||

|

||||

This quickstart shows you how to easily install a Kubernetes cluster on AWS.

|

||||

It uses a tool called [`kops`](https://github.com/kubernetes/kops).

|

||||

It uses a tool called [`kOps`](https://github.com/kubernetes/kops).

|

||||

|

||||

kops is an automated provisioning system:

|

||||

`kOps` is an automated provisioning system:

|

||||

|

||||

* Fully automated installation

|

||||

* Uses DNS to identify clusters

|

||||

* Self-healing: everything runs in Auto-Scaling Groups

|

||||

* Multiple OS support (Debian, Ubuntu 16.04 supported, CentOS & RHEL, Amazon Linux and CoreOS) - see the [images.md](https://github.com/kubernetes/kops/blob/master/docs/operations/images.md)

|

||||

* Multiple OS support (Amazon Linux, Debian, Flatcar, RHEL, Rocky and Ubuntu) - see the [images.md](https://github.com/kubernetes/kops/blob/master/docs/operations/images.md)

|

||||

* High-Availability support - see the [high_availability.md](https://github.com/kubernetes/kops/blob/master/docs/operations/high_availability.md)

|

||||

* Can directly provision, or generate terraform manifests - see the [terraform.md](https://github.com/kubernetes/kops/blob/master/docs/terraform.md)

|

||||

|

||||

|

||||

|

||||

## {{% heading "prerequisites" %}}

|

||||

|

||||

|

||||

* You must have [kubectl](/docs/tasks/tools/) installed.

|

||||

|

||||

* You must [install](https://github.com/kubernetes/kops#installing) `kops` on a 64-bit (AMD64 and Intel 64) device architecture.

|

||||

|

||||

* You must have an [AWS account](https://docs.aws.amazon.com/polly/latest/dg/setting-up.html), generate [IAM keys](https://docs.aws.amazon.com/general/latest/gr/aws-sec-cred-types.html#access-keys-and-secret-access-keys) and [configure](https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-configure.html#cli-quick-configuration) them. The IAM user will need [adequate permissions](https://github.com/kubernetes/kops/blob/master/docs/getting_started/aws.md#setup-iam-user).

|

||||

|

||||

|

||||

|

||||

<!-- steps -->

|

||||

|

||||

## Creating a cluster

|

||||

|

|

@ -121,7 +116,6 @@ brew update && brew install kops

|

|||

{{% /tab %}}

|

||||

{{< /tabs >}}

|

||||

|

||||

|

||||

### (2/5) Create a route53 domain for your cluster

|

||||

|

||||

kops uses DNS for discovery, both inside the cluster and outside, so that you can reach the kubernetes API server

|

||||

|

|

@ -178,7 +172,6 @@ the S3 bucket name.

|

|||

* You can `export KOPS_STATE_STORE=s3://clusters.dev.example.com` and then kops will use this location by default.

|

||||

We suggest putting this in your bash profile or similar.

|

||||

|

||||

|

||||

### (4/5) Build your cluster configuration

|

||||

|

||||

Run `kops create cluster` to create your cluster configuration:

|

||||

|

|

@ -201,10 +194,9 @@ set of instances, which will be registered as kubernetes nodes. On AWS this is

|

|||

You can have several instance groups, for example if you wanted nodes that are a mix of spot and on-demand instances, or

|

||||

GPU and non-GPU instances.

|

||||

|

||||

|

||||

### (5/5) Create the cluster in AWS

|

||||

|

||||

Run "kops update cluster" to create your cluster in AWS:

|

||||

Run `kops update cluster` to create your cluster in AWS:

|

||||

|

||||

`kops update cluster useast1.dev.example.com --yes`

|

||||

|

||||

|

|

@ -226,13 +218,9 @@ See the [list of add-ons](/docs/concepts/cluster-administration/addons/) to expl

|

|||

|

||||

* To delete your cluster: `kops delete cluster useast1.dev.example.com --yes`

|

||||

|

||||

|

||||

|

||||

## {{% heading "whatsnext" %}}

|

||||

|

||||

|

||||

* Learn more about Kubernetes [concepts](/docs/concepts/) and [`kubectl`](/docs/reference/kubectl/).

|

||||

* Learn more about `kops` [advanced usage](https://kops.sigs.k8s.io/) for tutorials, best practices and advanced configuration options.

|

||||

* Follow `kops` community discussions on Slack: [community discussions](https://github.com/kubernetes/kops#other-ways-to-communicate-with-the-contributors)

|

||||

* Contribute to `kops` by addressing or raising an issue [GitHub Issues](https://github.com/kubernetes/kops/issues)

|

||||

|

||||

* Learn more about `kOps` [advanced usage](https://kops.sigs.k8s.io/) for tutorials, best practices and advanced configuration options.

|

||||

* Follow `kOps` community discussions on Slack: [community discussions](https://github.com/kubernetes/kops#other-ways-to-communicate-with-the-contributors).

|

||||

* Contribute to `kOps` by addressing or raising an issue [GitHub Issues](https://github.com/kubernetes/kops/issues).

|

||||

|

|

|

|||

|

|

@ -345,7 +345,12 @@ ETCDCTL_API=3 etcdctl --endpoints 10.2.0.9:2379 snapshot restore snapshotdb

|

|||

```

|

||||

Another example for restoring using etcdctl options:

|

||||

```shell

|

||||

ETCDCTL_API=3 etcdctl --data-dir <data-dir-location> snapshot restore snapshotdb

|

||||

ETCDCTL_API=3 etcdctl snapshot restore --data-dir <data-dir-location> snapshotdb

|

||||

```

|

||||

Yet another example would be to first export the environment variable

|

||||

```shell

|

||||

export ETCDCTL_API=3

|

||||

etcdctl snapshot restore --data-dir <data-dir-location> snapshotdb

|

||||

```
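
If the restore is driven from a script, the same invocation could be assembled programmatically. The following is a minimal sketch that only builds the environment and argument list; the helper name `build_restore_command` and the data directory path are assumptions made for illustration, and nothing is executed.

```python
import os
from typing import Dict, List, Tuple


def build_restore_command(snapshot: str, data_dir: str) -> Tuple[Dict[str, str], List[str]]:
    """Return the environment and argv for an etcdctl snapshot restore."""
    # Equivalent to `export ETCDCTL_API=3` in the shell example.
    env = dict(os.environ, ETCDCTL_API="3")
    # Flags follow the `snapshot restore` subcommand, as in the examples above.
    argv = ["etcdctl", "snapshot", "restore", "--data-dir", data_dir, snapshot]
    return env, argv


# "/var/lib/etcd-restored" is a hypothetical location, not a recommended path.
env, argv = build_restore_command("snapshotdb", "/var/lib/etcd-restored")
print(" ".join(argv))
```

To run the command for real, the pair could be passed to `subprocess.run(argv, env=env, check=True)` on a host where `etcdctl` is installed.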

|

||||

|

||||

For more information and examples on restoring a cluster from a snapshot file, see

|

||||

|

|

|

|||

|

|

@ -17,8 +17,6 @@ DNS resolution process in your cluster.

|

|||

{{< include "task-tutorial-prereqs.md" >}}

|

||||

|

||||

Your cluster must be running the CoreDNS add-on.

|

||||

[Migrating to CoreDNS](/docs/tasks/administer-cluster/coredns/#migrating-to-coredns)

|

||||

explains how to use `kubeadm` to migrate from `kube-dns`.

|

||||

|

||||

{{% version-check %}}

|

||||

|

||||

|

|

@ -27,25 +25,27 @@ explains how to use `kubeadm` to migrate from `kube-dns`.

|

|||

## Introduction

|

||||

|

||||

DNS is a built-in Kubernetes service launched automatically

|

||||

using the _addon manager_

|

||||

[cluster add-on](http://releases.k8s.io/master/cluster/addons/README.md).

|

||||

|

||||

As of Kubernetes v1.12, CoreDNS is the recommended DNS Server, replacing kube-dns. If your cluster

|

||||

originally used kube-dns, you may still have `kube-dns` deployed rather than CoreDNS.

|

||||

using the _addon manager_ [cluster add-on](http://releases.k8s.io/master/cluster/addons/README.md).

|

||||

|

||||

{{< note >}}

|

||||

The CoreDNS Service is named `kube-dns` in the `metadata.name` field.

|

||||

This is so that there is greater interoperability with workloads that relied on the legacy `kube-dns` Service name to resolve addresses internal to the cluster. Using a Service named `kube-dns` abstracts away the implementation detail of which DNS provider is running behind that common name.

|

||||

The intent is to ensure greater interoperability with workloads that relied on

|

||||

the legacy `kube-dns` Service name to resolve addresses internal to the cluster.

|

||||

Using a Service named `kube-dns` abstracts away the implementation detail of

|

||||

which DNS provider is running behind that common name.

|

||||

{{< /note >}}

|

||||

|

||||

If you are running CoreDNS as a Deployment, it will typically be exposed as a Kubernetes Service with a static IP address.

|

||||

The kubelet passes DNS resolver information to each container with the `--cluster-dns=<dns-service-ip>` flag.

|

||||

If you are running CoreDNS as a Deployment, it will typically be exposed as

|

||||

a Kubernetes Service with a static IP address.

|

||||

The kubelet passes DNS resolver information to each container with the

|

||||

`--cluster-dns=<dns-service-ip>` flag.

|

||||

|

||||

DNS names also need domains. You configure the local domain in the kubelet

|

||||

with the flag `--cluster-domain=<default-local-domain>`.
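
The cluster domain becomes the suffix of the DNS names that Services receive. A minimal sketch of how such a name is composed follows; the helper name `service_fqdn` is hypothetical, and `cluster.local` is assumed here only because it is a commonly used cluster domain.

```python
def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name of a Service from its name, namespace, and cluster domain."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# The Service name and namespace below are the ones used by the cluster DNS add-on.
print(service_fqdn("kube-dns", "kube-system"))
```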

|

||||

|

||||

The DNS server supports forward lookups (A and AAAA records), port lookups (SRV records), reverse IP address lookups (PTR records),

|

||||

and more. For more information, see [DNS for Services and Pods](/docs/concepts/services-networking/dns-pod-service/).

|

||||

The DNS server supports forward lookups (A and AAAA records), port lookups (SRV records),

|

||||

reverse IP address lookups (PTR records), and more. For more information, see

|

||||

[DNS for Services and Pods](/docs/concepts/services-networking/dns-pod-service/).

|

||||

|

||||

If a Pod's `dnsPolicy` is set to `default`, it inherits the name resolution

|

||||

configuration from the node that the Pod runs on. The Pod's DNS resolution

|

||||

|

|

@ -59,15 +59,16 @@ inheriting DNS. Set it to a valid file path to specify a file other than

|

|||

|

||||

## CoreDNS

|

||||

|

||||

CoreDNS is a general-purpose authoritative DNS server that can serve as cluster DNS, complying with the [dns specifications](https://github.com/kubernetes/dns/blob/master/docs/specification.md).

|

||||

CoreDNS is a general-purpose authoritative DNS server that can serve as cluster DNS,

|

||||

complying with the [DNS specifications](https://github.com/kubernetes/dns/blob/master/docs/specification.md).

|

||||

|

||||

### CoreDNS ConfigMap options

|

||||

|

||||

CoreDNS is a DNS server that is modular and pluggable, and each plugin adds new functionality to CoreDNS.

|

||||

This can be configured by maintaining a [Corefile](https://coredns.io/2017/07/23/corefile-explained/), which is the CoreDNS

|

||||

configuration file. As a cluster administrator, you can modify the

|

||||

{{< glossary_tooltip text="ConfigMap" term_id="configmap" >}} for the CoreDNS Corefile to change how DNS service discovery

|

||||

behaves for that cluster.

|

||||

CoreDNS is a DNS server that is modular and pluggable, with plugins adding new functionalities.

|

||||

The CoreDNS server can be configured by maintaining a [Corefile](https://coredns.io/2017/07/23/corefile-explained/),

|

||||

which is the CoreDNS configuration file. As a cluster administrator, you can modify the

|

||||

{{< glossary_tooltip text="ConfigMap" term_id="configmap" >}} for the CoreDNS Corefile to

|

||||

change how DNS service discovery behaves for that cluster.

|

||||

|

||||

In Kubernetes, CoreDNS is installed with the following default Corefile configuration:

|

||||

|

||||

|

|

@ -102,35 +103,57 @@ data:

|

|||

The Corefile configuration includes the following [plugins](https://coredns.io/plugins/) of CoreDNS:

|

||||

|

||||

* [errors](https://coredns.io/plugins/errors/): Errors are logged to stdout.

|

||||

* [health](https://coredns.io/plugins/health/): Health of CoreDNS is reported to `http://localhost:8080/health`. In this extended syntax `lameduck` will make the process unhealthy then wait for 5 seconds before the process is shut down.

|

||||

* [ready](https://coredns.io/plugins/ready/): An HTTP endpoint on port 8181 will return 200 OK, when all plugins that are able to signal readiness have done so.

|

||||

* [kubernetes](https://coredns.io/plugins/kubernetes/): CoreDNS will reply to DNS queries based on IP of the services and pods of Kubernetes. You can find [more details](https://coredns.io/plugins/kubernetes/) about that plugin on the CoreDNS website. `ttl` allows you to set a custom TTL for responses. The default is 5 seconds. The minimum TTL allowed is 0 seconds, and the maximum is capped at 3600 seconds. Setting TTL to 0 will prevent records from being cached.

|

||||

The `pods insecure` option is provided for backward compatibility with _kube-dns_. You can use the `pods verified` option, which returns an A record only if there exists a pod in same namespace with matching IP. The `pods disabled` option can be used if you don't use pod records.

|

||||

* [prometheus](https://coredns.io/plugins/metrics/): Metrics of CoreDNS are available at `http://localhost:9153/metrics` in [Prometheus](https://prometheus.io/) format (also known as OpenMetrics).

|

||||

* [forward](https://coredns.io/plugins/forward/): Any queries that are not within the cluster domain of Kubernetes will be forwarded to predefined resolvers (/etc/resolv.conf).

|

||||

* [health](https://coredns.io/plugins/health/): Health of CoreDNS is reported to

|

||||

`http://localhost:8080/health`. In this extended syntax `lameduck` will make the process

|

||||

unhealthy then wait for 5 seconds before the process is shut down.

|

||||

* [ready](https://coredns.io/plugins/ready/): An HTTP endpoint on port 8181 will return 200 OK,

|

||||

when all plugins that are able to signal readiness have done so.

|

||||

* [kubernetes](https://coredns.io/plugins/kubernetes/): CoreDNS will reply to DNS queries

|

||||

based on the IP addresses of Services and Pods. You can find [more details](https://coredns.io/plugins/kubernetes/)

|

||||

about this plugin on the CoreDNS website.

|

||||

- `ttl` allows you to set a custom TTL for responses. The default is 5 seconds.

|

||||

The minimum TTL allowed is 0 seconds, and the maximum is capped at 3600 seconds.

|

||||

Setting TTL to 0 will prevent records from being cached.

|

||||

- The `pods insecure` option is provided for backward compatibility with `kube-dns`.

|

||||

- You can use the `pods verified` option, which returns an A record only if there exists a pod

|

||||

in the same namespace with a matching IP.

|

||||

- The `pods disabled` option can be used if you don't use pod records.

|

||||

* [prometheus](https://coredns.io/plugins/metrics/): Metrics of CoreDNS are available at

|

||||

`http://localhost:9153/metrics` in the [Prometheus](https://prometheus.io/) format

|

||||

(also known as OpenMetrics).

|

||||

* [forward](https://coredns.io/plugins/forward/): Any queries that are not within the Kubernetes

|

||||

cluster domain are forwarded to predefined resolvers (/etc/resolv.conf).

|

||||

* [cache](https://coredns.io/plugins/cache/): This enables a frontend cache.

|

||||

* [loop](https://coredns.io/plugins/loop/): Detects simple forwarding loops and halts the CoreDNS process if a loop is found.

|

||||

* [reload](https://coredns.io/plugins/reload): Allows automatic reload of a changed Corefile. After you edit the ConfigMap configuration, allow two minutes for your changes to take effect.

|

||||

* [loadbalance](https://coredns.io/plugins/loadbalance): This is a round-robin DNS loadbalancer that randomizes the order of A, AAAA, and MX records in the answer.

|

||||

* [loop](https://coredns.io/plugins/loop/): Detects simple forwarding loops and

|

||||

halts the CoreDNS process if a loop is found.

|

||||

* [reload](https://coredns.io/plugins/reload): Allows automatic reload of a changed Corefile.

|

||||

After you edit the ConfigMap configuration, allow two minutes for your changes to take effect.

|

||||

* [loadbalance](https://coredns.io/plugins/loadbalance): This is a round-robin DNS loadbalancer

|

||||

that randomizes the order of A, AAAA, and MX records in the answer.

|

||||

|

||||

You can modify the default CoreDNS behavior by modifying the ConfigMap.

|

||||

|

||||

### Configuration of Stub-domain and upstream nameserver using CoreDNS

|

||||

|

||||

CoreDNS has the ability to configure stubdomains and upstream nameservers using the [forward plugin](https://coredns.io/plugins/forward/).

|

||||

CoreDNS has the ability to configure stub-domains and upstream nameservers

|

||||

using the [forward plugin](https://coredns.io/plugins/forward/).

|

||||

|

||||

#### Example

|

||||

If a cluster operator has a [Consul](https://www.consul.io/) domain server located at 10.150.0.1, and all Consul names have the suffix .consul.local. To configure it in CoreDNS, the cluster administrator creates the following stanza in the CoreDNS ConfigMap.

|

||||

|

||||

Suppose a cluster operator has a [Consul](https://www.consul.io/) domain server located at "10.150.0.1",

|

||||

and all Consul names have the suffix ".consul.local". To configure it in CoreDNS,

|

||||

the cluster administrator creates the following stanza in the CoreDNS ConfigMap.

|

||||

|

||||

```
consul.local:53 {
    errors
    cache 30
    forward . 10.150.0.1
}
```

|

||||

|

||||

To explicitly force all non-cluster DNS lookups to go through a specific nameserver at 172.16.0.1, point the `forward` to the nameserver instead of `/etc/resolv.conf`

|

||||

To explicitly force all non-cluster DNS lookups to go through a specific nameserver at 172.16.0.1,

|

||||

point the `forward` plugin to the nameserver instead of `/etc/resolv.conf`:

|

||||

|

||||

```

|

||||

forward . 172.16.0.1

|

||||

|

|

@ -167,88 +190,12 @@ data:

|

|||

}

|

||||

```

|

||||

|

||||

The `kubeadm` tool supports automatic translation from the kube-dns ConfigMap

|

||||

to the equivalent CoreDNS ConfigMap.

|

||||

|

||||

{{< note >}}

|

||||

While kube-dns accepts an FQDN for stubdomain and nameserver (eg: ns.foo.com), CoreDNS does not support this feature.

|

||||

CoreDNS does not support FQDNs for stub-domains and nameservers (for example, "ns.foo.com").

|

||||

During translation, all FQDN nameservers will be omitted from the CoreDNS config.

|

||||

{{< /note >}}

|

||||

|

||||

## CoreDNS configuration equivalent to kube-dns

|

||||

|

||||

CoreDNS supports the features of kube-dns and more.

|

||||

A ConfigMap created for kube-dns to support `StubDomains` and `upstreamNameservers` translates to the `forward` plugin in CoreDNS.

|

||||

|

||||

### Example

|

||||

|

||||

This example ConfigMap for kube-dns specifies `stubDomains` and `upstreamNameservers`:

|

||||

|

||||

```yaml
apiVersion: v1
data:
  stubDomains: |
    {"abc.com" : ["1.2.3.4"], "my.cluster.local" : ["2.3.4.5"]}
  upstreamNameservers: |
    ["8.8.8.8", "8.8.4.4"]
kind: ConfigMap
```

|

||||

|

||||

The equivalent configuration in CoreDNS creates a Corefile:

|

||||

|

||||

* For stubDomains:

|

||||

```yaml
abc.com:53 {
    errors
    cache 30
    forward . 1.2.3.4
}
my.cluster.local:53 {
    errors
    cache 30
    forward . 2.3.4.5
}
```

|

||||

|

||||

The complete Corefile with the default plugins:

|

||||

|

||||

```
.:53 {
    errors
    health
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
    }
    federation cluster.local {
        foo foo.feddomain.com
    }
    prometheus :9153
    forward . 8.8.8.8 8.8.4.4
    cache 30
}
abc.com:53 {
    errors
    cache 30
    forward . 1.2.3.4
}
my.cluster.local:53 {
    errors
    cache 30
    forward . 2.3.4.5
}
```
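
The mapping from `stubDomains` to per-domain server blocks is mechanical, so it can be sketched in a few lines. The following is an illustration only, not the translation code that `kubeadm` uses: the function names are hypothetical, the `ns.foo.com` entry is added to show an FQDN nameserver being omitted as the note above describes, and skipping a domain that has no IP nameservers left is an assumption.

```python
import ipaddress
import json
from typing import Dict, List


def is_ip(value: str) -> bool:
    """Return True if value parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


def stub_domains_to_corefile(stub_domains: Dict[str, List[str]]) -> str:
    """Render one Corefile server block per stub domain."""
    blocks = []
    for domain, nameservers in stub_domains.items():
        # FQDN nameservers are dropped; only IP addresses are kept.
        ips = [ns for ns in nameservers if is_ip(ns)]
        if not ips:
            # Assumption: a domain with no usable nameserver gets no block.
            continue
        blocks.append(
            f"{domain}:53 {{\n"
            "    errors\n"
            "    cache 30\n"
            f"    forward . {' '.join(ips)}\n"
            "}"
        )
    return "\n".join(blocks)


# The JSON mirrors the stubDomains value from the kube-dns ConfigMap example,
# plus one FQDN nameserver added for illustration.
stub_domains = json.loads(
    '{"abc.com": ["1.2.3.4"], "my.cluster.local": ["2.3.4.5", "ns.foo.com"]}'
)
print(stub_domains_to_corefile(stub_domains))
```

The `upstreamNameservers` list maps to the `forward . 8.8.8.8 8.8.4.4` line in the default `.:53` block and is left out of this sketch.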

|

||||

|

||||

## Migration to CoreDNS

|

||||

|

||||

To migrate from kube-dns to CoreDNS, a detailed

|

||||

[blog article](https://coredns.io/2018/05/21/migration-from-kube-dns-to-coredns/)

|

||||

is available to help users adapt CoreDNS in place of kube-dns.

|

||||

|

||||

You can also migrate using the official CoreDNS

|

||||

[deploy script](https://github.com/coredns/deployment/blob/master/kubernetes/deploy.sh).

|

||||

|

||||

|

||||

## {{% heading "whatsnext" %}}

|

||||

|

||||

- Read [Debugging DNS Resolution](/docs/tasks/administer-cluster/dns-debugging-resolution/)

|

||||

|

||||

|

|

|

|||

|

|

@ -88,7 +88,7 @@ would provision a network resource like a Google Compute Engine persistent disk,

|

|||

an NFS share, or an Amazon Elastic Block Store volume. Cluster administrators can also

|

||||

use [StorageClasses](/docs/reference/generated/kubernetes-api/{{< param "version" >}}/#storageclass-v1-storage-k8s-io)

|

||||

to set up

|

||||

[dynamic provisioning](/blog/2016/10/dynamic-provisioning-and-storage-in-kubernetes).

|

||||

[dynamic provisioning](/docs/concepts/storage/dynamic-provisioning/).

|

||||

|

||||

Here is the configuration file for the hostPath PersistentVolume:

|

||||

|

||||

|

|

|

|||

|

|

@ -56,7 +56,7 @@ go get -u github.com/kubernetes/kompose

|

|||

{{% tab name="CentOS package" %}}

|

||||

|

||||

Kompose is in the [EPEL](https://fedoraproject.org/wiki/EPEL) CentOS repository.

|

||||

If you don't have [EPEL](https://fedoraproject.org/wiki/EPEL) repository already installed and enabled you can do it by running `sudo yum install epel-release`

|

||||

If you don't have the [EPEL](https://fedoraproject.org/wiki/EPEL) repository installed and enabled, you can do so by running `sudo yum install epel-release`.

|

||||

|

||||

If you have [EPEL](https://fedoraproject.org/wiki/EPEL) enabled in your system, you can install Kompose like any other package.

|

||||

|

||||

|

|

@ -76,7 +76,7 @@ sudo dnf -y install kompose

|

|||

{{% /tab %}}

|

||||

{{% tab name="Homebrew (macOS)" %}}

|

||||

|

||||

On macOS you can install latest release via [Homebrew](https://brew.sh):

|

||||

On macOS you can install the latest release via [Homebrew](https://brew.sh):

|

||||

|

||||

```bash

|

||||

brew install kompose

|

||||

|

|

@ -281,7 +281,7 @@ frontend-service.yaml mongodb-deployment.yaml redis-slave

|

|||

redis-master-deployment.yaml

|

||||

```

|

||||

|

||||

When multiple docker-compose files are provided the configuration is merged. Any configuration that is common will be over ridden by subsequent file.

|

||||

When multiple docker-compose files are provided, the configuration is merged. Any configuration that is common will be overridden by a subsequent file.

|

||||

|

||||

### OpenShift `kompose convert` example

|

||||

|

||||

|

|

@ -347,7 +347,7 @@ INFO Kubernetes file "redis-replicationcontroller.yaml" created

|

|||

INFO Kubernetes file "web-replicationcontroller.yaml" created

|

||||

```

|

||||

|

||||

The `*-replicationcontroller.yaml` files contain the Replication Controller objects. If you want to specify replicas (default is 1), use `--replicas` flag: `kompose convert --replication-controller --replicas 3`

|

||||

The `*-replicationcontroller.yaml` files contain the Replication Controller objects. If you want to specify replicas (default is 1), use the `--replicas` flag: `kompose convert --replication-controller --replicas 3`.

|

||||

|

||||

```shell

|

||||

kompose convert --daemon-set

|

||||

|

|

@ -357,7 +357,7 @@ INFO Kubernetes file "redis-daemonset.yaml" created

|

|||

INFO Kubernetes file "web-daemonset.yaml" created

|

||||

```

|

||||

|

||||

The `*-daemonset.yaml` files contain the DaemonSet objects

|

||||

The `*-daemonset.yaml` files contain the DaemonSet objects.

|

||||

|

||||

If you want to generate a Chart to be used with [Helm](https://github.com/kubernetes/helm), run:

|

||||

|

||||

|

|

@ -396,44 +396,44 @@ The chart structure is aimed at providing a skeleton for building your Helm char

|

|||

|

||||

- `kompose.service.type` defines the type of service to be created.

|

||||

|

||||

  For example:

  ```yaml
  version: "2"
  services:
    nginx:
      image: nginx
      dockerfile: foobar
      build: ./foobar
      cap_add:
        - ALL
      container_name: foobar
      labels:
        kompose.service.type: nodeport
  ```

|

||||

|

||||

- `kompose.service.expose` defines whether the service needs to be accessible from outside the cluster. If the value is set to "true", the provider sets the endpoint automatically, and for any other value, the value is set as the hostname. If multiple ports are defined in a service, the first one is chosen to be exposed.

|