viz tools release

122

README.md

@@ -93,6 +93,13 @@ Pull requests are welcome!

```
* Other datasets can be used, e.g. `--dataset blizzard` for Blizzard data.
* For the mailabs dataset, run `preprocess.py --help` for options. Also note that mailabs uses a sample_size of 16000.
* You may want to create your own preprocessing script that works for your dataset. You can follow the examples in preprocess.py and ./datasets.

preprocess.py creates a train.txt and a metadata.txt. train.txt is the file you pass to train.py as its input parameter. metadata.txt can be used as a reference for the max input length, the max output length, and how many hours of audio your dataset contains.
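
As a rough illustration of the figures mentioned above, the sketch below derives them from pipe-delimited rows. It assumes the column layout that analyze.py reads from train.txt (frame count in the third column, text in the fourth) and a 12.5 ms frame shift; `summarize_train_rows` and the sample rows are made up for this example and are not part of the repository.

```python
import csv
import io

FRAME_SHIFT_MS = 12.5  # assumed frame shift, matching get_audio_seconds in analyze.py


def summarize_train_rows(lines):
    """Summarize pipe-delimited rows: column 2 is the frame count, column 3 the text."""
    total_frames = 0
    max_frames = 0
    max_chars = 0
    for row in csv.reader(lines, delimiter="|"):
        frames = int(row[2])
        total_frames += frames
        max_frames = max(max_frames, frames)
        max_chars = max(max_chars, len(row[3]))
    hours = total_frames * FRAME_SHIFT_MS / 1000 / 3600
    return {
        "hours": hours,
        "max_output_frames": max_frames,
        "max_input_chars": max_chars,
    }


# Made-up sample rows; the first two columns stand in for spectrogram file names.
sample = io.StringIO(
    "spec-0.npy|mel-0.npy|400|Hello there.\n"
    "spec-1.npy|mel-1.npy|800|This is a longer sentence.\n"
)
summary = summarize_train_rows(sample)
print(summary)
```

To run it on a real file, pass an open file handle instead of the `io.StringIO` sample.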

**NOTE**
Modify hparams.py to cater to your dataset.

4. **Train a model**
```

@@ -101,9 +108,9 @@ Pull requests are welcome!

Tunable hyperparameters are found in [hparams.py](hparams.py). You can adjust these at the command
line using the `--hparams` flag, for example `--hparams="batch_size=16,outputs_per_step=2"`.
-Hyperparameters should generally be set to the same values at both training and eval time. I highly recommend
-setting the params in the hparams.py file to gurantee consistentcy during preprocessing, training, evaluating,
-and running the demo server.
+Hyperparameters should generally be set to the same values at both training and eval time. **I highly recommend
+setting the params in the hparams.py file to guarantee consistency during preprocessing, training, evaluating,
+and running the demo server. The --hparams flag will be deprecated soon**
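
To make the override format concrete, here is a minimal sketch of how a `name=value,name=value` string could be merged over defaults. It is not the parser the project uses; `parse_overrides` and the default values shown are hypothetical and only illustrate why the same overrides have to be repeated at every stage.

```python
def parse_overrides(spec):
    """Parse 'name=value,name=value' into a dict, guessing bool/int/float types."""
    overrides = {}
    for item in spec.split(","):
        if not item.strip():
            continue
        name, _, raw = item.partition("=")
        raw = raw.strip()
        if raw in ("True", "False"):
            value = raw == "True"
        else:
            try:
                value = int(raw)
            except ValueError:
                try:
                    value = float(raw)
                except ValueError:
                    value = raw  # keep anything else as a string
        overrides[name.strip()] = value
    return overrides


defaults = {"batch_size": 32, "outputs_per_step": 5}  # illustrative values only
merged = {**defaults, **parse_overrides("batch_size=16,outputs_per_step=2")}
print(merged)
```

Because overrides live only on the command line, forgetting them at eval time silently falls back to the defaults, which is the inconsistency the recommendation above is meant to avoid.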

5. **Monitor with Tensorboard** (optional)

@ -126,3 +133,112 @@ Pull requests are welcome!

|

|||

```

|

||||

If you set the `--hparams` flag when training, set the same value here.

|

||||

|

||||

7. **Analyzing Data**

|

||||

|

||||

You can visualize your data set after preprocessing the data. See more details info [here](#visualizing-your-data)

|

||||

|

||||

|

||||

## Notes and Common Issues

|

||||

|

||||

* [TCMalloc](http://goog-perftools.sourceforge.net/doc/tcmalloc.html) seems to improve

|

||||

training speed and avoids occasional slowdowns seen with the default allocator. You

|

||||

can enable it by installing it and setting `LD_PRELOAD=/usr/lib/libtcmalloc.so`. With TCMalloc,

|

||||

you can get around 1.1 sec/step on a GTX 1080Ti.

|

||||

|

||||

* You can train with [CMUDict](http://www.speech.cs.cmu.edu/cgi-bin/cmudict) by downloading the

|

||||

dictionary to ~/tacotron/training and then passing the flag `--hparams="use_cmudict=True"` to

|

||||

train.py. This will allow you to pass ARPAbet phonemes enclosed in curly braces at eval

|

||||

time to force a particular pronunciation, e.g. `Turn left on {HH AW1 S S T AH0 N} Street.`

|

||||

|

||||

* If you pass a Slack incoming webhook URL as the `--slack_url` flag to train.py, it will send

|

||||

you progress updates every 1000 steps.

|

||||

|

||||

* Occasionally, you may see a spike in loss and the model will forget how to attend (the

|

||||

alignments will no longer make sense). Although it will recover eventually, it may

|

||||

save time to restart at a checkpoint prior to the spike by passing the

|

||||

`--restore_step=150000` flag to train.py (replacing 150000 with a step number prior to the

|

||||

spike). **Update**: a recent [fix](https://github.com/keithito/tacotron/pull/7) to gradient

|

||||

clipping by @candlewill may have fixed this.

|

||||

|

||||

* During eval and training, audio length is limited to `max_iters * outputs_per_step * frame_shift_ms`

|

||||

milliseconds. With the defaults (max_iters=200, outputs_per_step=5, frame_shift_ms=12.5), this is

|

||||

12.5 seconds.

|

||||

|

||||

If your training examples are longer, you will see an error like this:

|

||||

`Incompatible shapes: [32,1340,80] vs. [32,1000,80]`

|

||||

|

||||

To fix this, you can set a larger value of `max_iters` by passing `--hparams="max_iters=300"` to

|

||||

train.py (replace "300" with a value based on how long your audio is and the formula above).

|

||||

|

||||

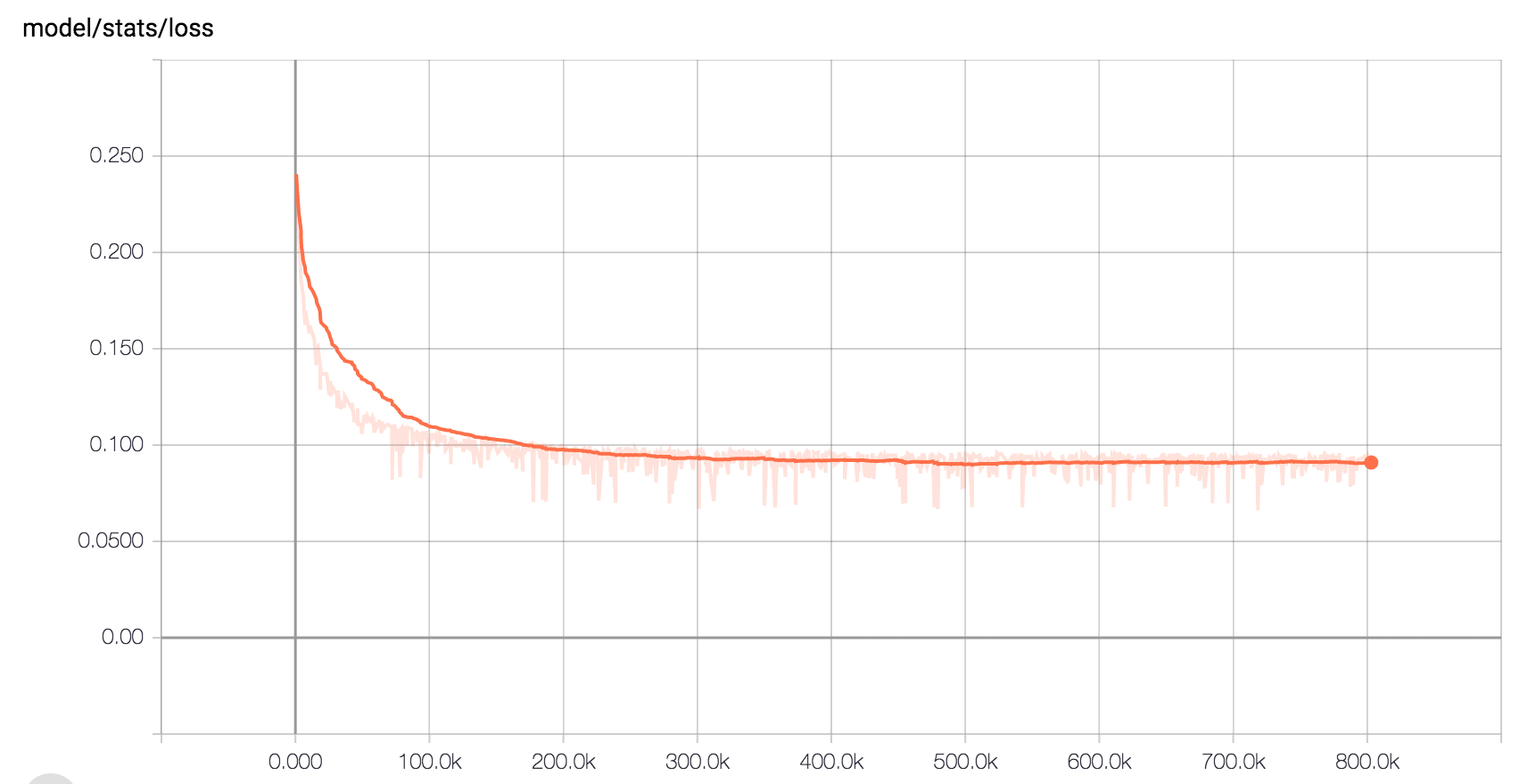

* Here is the expected loss curve when training on LJ Speech with the default hyperparameters:

|

||||

|

||||
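
The audio-length limit described in the notes above can be checked with a quick calculation. The sketch below uses the formula and default values quoted there; the helper names are made up for illustration, and reading 1340 in the sample error message as a frame count is an assumption.

```python
import math


def max_audio_seconds(max_iters, outputs_per_step, frame_shift_ms):
    """Longest audio the decoder can cover, in seconds."""
    return max_iters * outputs_per_step * frame_shift_ms / 1000


def min_max_iters(num_frames, outputs_per_step):
    """Smallest max_iters that covers an example with num_frames frames."""
    return math.ceil(num_frames / outputs_per_step)


limit_seconds = max_audio_seconds(200, 5, 12.5)
needed_iters = min_max_iters(1340, 5)  # 1340 taken from the sample error message
print(limit_seconds, needed_iters)
```

With the defaults the limit is 12.5 seconds, and an example of 1340 frames would need `max_iters` of at least 268.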

## Other Implementations

* By Alex Barron: https://github.com/barronalex/Tacotron
* By Kyubyong Park: https://github.com/Kyubyong/tacotron

## Visualizing Your Data

[analyze](./analyze/__main__.py) is a tool to visualize your dataset after preprocessing. This step is important to ensure quality in the voice generation.

Example
```
python analyze.py --train_file_path=~/tacotron/training/train.txt --save_to=~/tacotron/visuals --cmu_dict_path=~/cmudict-0.7b
```

`--cmu_dict_path` is optional; pass it if you'd like to visualize the distribution of phonemes.

Analyze outputs 6 different plots.

### Average Seconds vs Character Lengths

This plot shows your audio data from a time perspective: the average length in seconds of your audio samples for each character length.

E.g., for all 50-character samples, the average audio length is 3 seconds. Your data should show a linear pattern like the example above.

Having a linear pattern for time vs character lengths is important to ensure a consistent speech rate during audio generation.
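
One way to put a number on "linear" is an ordinary least-squares fit of average seconds against character length. The sketch below is not part of analyze.py; `linear_fit` and the sample points are illustrative, chosen to match the 50-character, 3-second example above.

```python
from statistics import mean


def linear_fit(xs, ys):
    """Least-squares fit y = slope * x + intercept, returned together with R^2."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return slope, intercept, 1 - ss_res / ss_tot


char_lengths = [25, 50, 75, 100]    # made-up character lengths
avg_seconds = [1.5, 3.0, 4.5, 6.0]  # made-up average durations
slope, intercept, r_squared = linear_fit(char_lengths, avg_seconds)
print(slope, intercept, r_squared)
```

An R² close to 1 suggests a steady speech rate; the slope is the average number of seconds per character.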

Below is a bad example of average seconds vs character lengths in your dataset. You can see that there is inconsistency towards the higher character length range. At 180, the average audio length was 8 seconds, while at 185 the average was 6.

### Median Seconds vs Character Lengths

Another perspective for time, which plots the median.

### Mode Seconds vs Character Lengths

Another perspective for time, which plots the mode.

### Standard Deviation vs Character Lengths

Plots the standard deviation, or spread, of your dataset. The standard deviation should stay in a range no bigger than 0.8.

E.g., for samples with a character length of 100, the average audio length is 6 seconds. According to the chart above, 100-character samples have an std of about 0.6. That means most samples in the 100 character length range should be no more than 6.6 seconds and no less than 5.4 seconds.

Having a low standard deviation is important to ensure a consistent speech rate during audio generation.
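
The 0.8 guideline above can also be checked programmatically. This is a small sketch under the assumption that durations are already grouped by character length; `noisy_lengths` and the sample durations are made up for illustration.

```python
from statistics import stdev

STD_LIMIT = 0.8  # guideline quoted in the text above


def noisy_lengths(durations_by_length, limit=STD_LIMIT):
    """Return {char_length: std} for lengths whose duration spread exceeds the limit."""
    flagged = {}
    for char_len, durations in durations_by_length.items():
        if len(durations) < 2:
            continue  # stdev needs at least two samples
        spread = stdev(durations)
        if spread > limit:
            flagged[char_len] = spread
    return flagged


# Made-up durations in seconds, keyed by character length.
sample = {100: [5.4, 6.0, 6.6], 180: [6.0, 8.0, 10.0], 185: [6.0]}
flagged = noisy_lengths(sample)
print(flagged)
```

Lengths that show up in the result are candidates for a closer look at the underlying recordings.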

Below is an example of a bad distribution of standard deviations.

### Number of Samples vs Character Lengths

Plots the number of samples you have at each character length.

E.g., in the 100 character length range, there are about 125 samples.

It's important to keep this plot as normally distributed as possible so that the model has enough data to produce a natural speech rate. If this chart is off balance, you may get a weird speech rate during voice generation.
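
A quick way to spot an off-balance distribution without a chart is to count samples per character-length bucket. The bucket size and minimum count below are arbitrary assumptions, and both helper names are hypothetical.

```python
from collections import Counter


def samples_per_bucket(utterances, bucket_size=25):
    """Count utterances per character-length bucket, keyed by the bucket's lower bound."""
    counts = Counter((len(u) // bucket_size) * bucket_size for u in utterances)
    return dict(sorted(counts.items()))


def sparse_buckets(counts, min_samples):
    """List the buckets that hold fewer than min_samples utterances."""
    return [start for start, n in counts.items() if n < min_samples]


# Made-up utterances of 10, 30, 40 and 120 characters.
utterances = ["a" * 10, "b" * 30, "c" * 40, "d" * 120]
counts = samples_per_bucket(utterances)
sparse = sparse_buckets(counts, min_samples=2)
print(counts, sparse)
```

Buckets reported as sparse are the ranges where the model is likely to have too little data.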

Below is an example of a bad distribution for number of samples. This distribution will generate sequences in the 25 - 100 character length range really well, but anything past that will have bad quality. In this example you may experience a speed-up in speech rate as the model tries to squeeze 150 characters into 3 seconds.

### Phonemes Distribution

This plot is only output if you use the `--cmu_dict_path` parameter. The x-axis shows the unique phonemes and the y-axis shows how many times each phoneme appears in your dataset. We are still experimenting with how the distribution should look, but the theory is that a balanced distribution of phonemes will increase the quality of pronunciation.
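
The counting behind this plot can be sketched roughly as follows. It follows the same idea as `plot_phonemes` (words without a dictionary entry are tallied under "None") but uses a two-word stand-in dictionary rather than the real CMUDict file; `PRONUNCIATIONS` and `count_phonemes` are illustrative names.

```python
from collections import Counter

# Tiny stand-in for a pronouncing dictionary (hypothetical subset, ARPAbet symbols).
PRONUNCIATIONS = {
    "HELLO": "HH AH0 L OW1",
    "WORLD": "W ER1 L D",
}


def count_phonemes(utterances, pronunciations):
    """Count phoneme occurrences; words missing from the dictionary count as 'None'."""
    counts = Counter()
    for utt in utterances:
        for word in utt.split():
            phones = pronunciations.get(word.upper())
            if phones is None:
                counts["None"] += 1
            else:
                counts.update(phones.split())
    return counts


counts = count_phonemes(["hello world", "hello mimic"], PRONUNCIATIONS)
print(counts.most_common())
```

A large "None" count usually means many words, often ones with punctuation attached, are not being found in the dictionary.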

218

analyze.py

@@ -1 +1,217 @@
# visualisation tools for mimic2
import matplotlib.pyplot as plt
from statistics import stdev, mode, mean, median
from statistics import StatisticsError
import argparse
import glob
import os
import csv
import copy
import seaborn as sns
import random
from text.cmudict import CMUDict

def get_audio_seconds(frames):
    # each frame covers 12.5 ms of audio
    return (frames*12.5)/1000


def append_data_statistics(meta_data):
    # get data statistics
    for char_cnt in meta_data:
        data = meta_data[char_cnt]["data"]
        audio_len_list = [d["audio_len"] for d in data]
        mean_audio_len = mean(audio_len_list)
        try:
            mode_audio_list = [round(d["audio_len"], 2) for d in data]
            mode_audio_len = mode(mode_audio_list)
        except StatisticsError:
            # no unique mode: fall back to the first sample
            mode_audio_len = audio_len_list[0]
        median_audio_len = median(audio_len_list)

        try:
            std = stdev(
                d["audio_len"] for d in data
            )
        except StatisticsError:
            # stdev needs at least two samples
            std = 0

        meta_data[char_cnt]["mean"] = mean_audio_len
        meta_data[char_cnt]["median"] = median_audio_len
        meta_data[char_cnt]["mode"] = mode_audio_len
        meta_data[char_cnt]["std"] = std
    return meta_data


def process_meta_data(path):
    meta_data = {}

    # load meta data, grouping samples by character count
    with open(path, 'r') as f:
        data = csv.reader(f, delimiter='|')
        for row in data:
            frames = int(row[2])
            utt = row[3]
            audio_len = get_audio_seconds(frames)
            char_count = len(utt)
            if not meta_data.get(char_count):
                meta_data[char_count] = {
                    "data": []
                }

            meta_data[char_count]["data"].append(
                {
                    "utt": utt,
                    "frames": frames,
                    "audio_len": audio_len,
                    "row": "{}|{}|{}|{}".format(row[0], row[1], row[2], row[3])
                }
            )

    meta_data = append_data_statistics(meta_data)

    return meta_data


def get_data_points(meta_data):
    x = [char_cnt for char_cnt in meta_data]
    y_avg = [meta_data[d]['mean'] for d in meta_data]
    y_mode = [meta_data[d]['mode'] for d in meta_data]
    y_median = [meta_data[d]['median'] for d in meta_data]
    y_std = [meta_data[d]['std'] for d in meta_data]
    y_num_samples = [len(meta_data[d]['data']) for d in meta_data]
    return {
        "x": x,
        "y_avg": y_avg,
        "y_mode": y_mode,
        "y_median": y_median,
        "y_std": y_std,
        "y_num_samples": y_num_samples
    }


def save_training(file_path, meta_data):
    # write all rows back out in shuffled order
    rows = []
    for char_cnt in meta_data:
        data = meta_data[char_cnt]['data']
        for d in data:
            rows.append(d['row'] + "\n")

    random.shuffle(rows)
    with open(file_path, 'w+') as f:
        for row in rows:
            f.write(row)


def plot(meta_data, save_path=None):
    save = False
    if save_path:
        save = True

    graph_data = get_data_points(meta_data)
    x = graph_data['x']
    y_avg = graph_data['y_avg']
    y_std = graph_data['y_std']
    y_mode = graph_data['y_mode']
    y_median = graph_data['y_median']
    y_num_samples = graph_data['y_num_samples']

    plt.figure()
    plt.plot(x, y_avg, 'ro')
    plt.xlabel("character lengths", fontsize=30)
    plt.ylabel("avg seconds", fontsize=30)
    if save:
        name = "char_len_vs_avg_secs"
        plt.savefig(os.path.join(save_path, name))

    plt.figure()
    plt.plot(x, y_mode, 'ro')
    plt.xlabel("character lengths", fontsize=30)
    plt.ylabel("mode seconds", fontsize=30)
    if save:
        name = "char_len_vs_mode_secs"
        plt.savefig(os.path.join(save_path, name))

    plt.figure()
    plt.plot(x, y_median, 'ro')
    plt.xlabel("character lengths", fontsize=30)
    plt.ylabel("median seconds", fontsize=30)
    if save:
        name = "char_len_vs_med_secs"
        plt.savefig(os.path.join(save_path, name))

    plt.figure()
    plt.plot(x, y_std, 'ro')
    plt.xlabel("character lengths", fontsize=30)
    plt.ylabel("standard deviation", fontsize=30)
    if save:
        name = "char_len_vs_std"
        plt.savefig(os.path.join(save_path, name))

    plt.figure()
    plt.plot(x, y_num_samples, 'ro')
    plt.xlabel("character lengths", fontsize=30)
    plt.ylabel("number of samples", fontsize=30)
    if save:
        name = "char_len_vs_num_samples"
        plt.savefig(os.path.join(save_path, name))


def plot_phonemes(train_path, cmu_dict_path, save_path):
    cmudict = CMUDict(cmu_dict_path)

    phonemes = {}

    with open(train_path, 'r') as f:
        data = csv.reader(f, delimiter='|')
        # words without a dictionary entry are counted under "None"
        phonemes["None"] = 0
        for row in data:
            words = row[3].split()
            for word in words:
                pho = cmudict.lookup(word)
                if pho:
                    indie = pho[0].split()
                    for nemes in indie:
                        if phonemes.get(nemes):
                            phonemes[nemes] += 1
                        else:
                            phonemes[nemes] = 1
                else:
                    phonemes["None"] += 1

    x, y = [], []
    for key in phonemes:
        x.append(key)
        y.append(phonemes[key])

    plt.figure()
    plt.rcParams["figure.figsize"] = (50, 20)
    # keyword arguments: newer seaborn releases no longer accept x and y positionally
    plot = sns.barplot(x=x, y=y)
    if save_path:
        fig = plot.get_figure()
        fig.savefig(os.path.join(save_path, "phoneme_dist"))


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument(
        '--train_file_path', required=True,
        help='this is the path to the train.txt file that the preprocess.py script creates'
    )
    parser.add_argument(
        '--save_to', help='path to save charts of data to'
    )
    parser.add_argument(
        '--cmu_dict_path', help='give cmudict-0.7b to see phoneme distribution'
    )
    args = parser.parse_args()
    meta_data = process_meta_data(args.train_file_path)
    plt.rcParams["figure.figsize"] = (10, 5)
    plot(meta_data, save_path=args.save_to)
    if args.cmu_dict_path:
        plt.rcParams["figure.figsize"] = (30, 10)
        plot_phonemes(args.train_file_path, args.cmu_dict_path, args.save_to)

    plt.show()

if __name__ == '__main__':
    main()


@@ -5,5 +5,6 @@ matplotlib==2.0.2
numpy==1.14.3
scipy==0.19.0
tqdm==4.11.2
seaborn
flask_cors
flask